Takeaways

– Prompt injection attacks can bypass Retrieval Augmented Generation (RAG) models, multiplying the risk rather than neutralizing it

– RAG models are vulnerable to prompt injection due to their reliance on external knowledge sources that can be manipulated

– This vulnerability affects a wide range of AI applications that use RAG, including language models, question-answering systems, and knowledge-intensive tasks

– Researchers warn that prompt injection attacks could lead to the generation of false or misleading information, with significant implications for the trustworthiness of AI systems

– Addressing this issue will require advancements in prompt engineering, model architecture, and safety measures to ensure the integrity of AI outputs

RAG Doesn’t Neutralize Prompt Injection. It Multiplies It.

According to a recent report published on Towards AI, Retrieval Augmented Generation (RAG) models, which combine language models with external knowledge sources, are vulnerable to prompt injection attacks. Instead of neutralizing the risk, the researchers found that RAG models can actually multiply the impact of prompt injection, leading to the generation of false or misleading information.

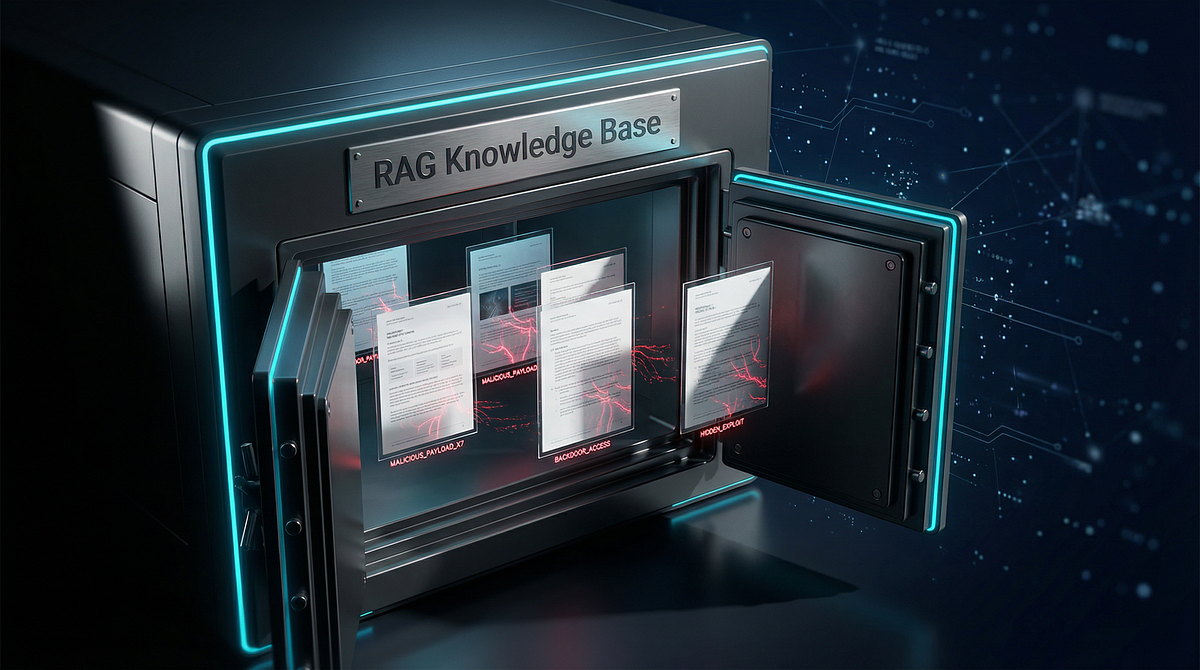

The Vulnerability of RAG Models to Prompt Injection

RAG models rely on external knowledge sources, such as databases or information retrieval systems, to augment their language modeling capabilities. However, this reliance on external information can also be a weakness, as these knowledge sources can be manipulated through prompt injection attacks.

**Prompt Injection Risks:**

– Attackers can craft prompts that exploit vulnerabilities in the external knowledge sources, causing the RAG model to generate false or misleading information

– The combination of the language model and the manipulated external knowledge can amplify the impact of the prompt injection, leading to more convincing and potentially harmful outputs

**Affected AI Applications:**

– Language models

– Question-answering systems

– Knowledge-intensive tasks, such as summarization, dialogue, and information retrieval

The Implications of RAG Vulnerability

The vulnerability of RAG models to prompt injection attacks has significant implications for the trustworthiness and reliability of AI systems that rely on these models.

**Trustworthiness Concerns:**

– Prompt injection could lead to the generation of false or misleading information, undermining the credibility and reliability of AI systems

– This could have far-reaching consequences in domains such as healthcare, finance, and policymaking, where AI-generated outputs are relied upon for decision-making

**Potential Mitigation Strategies:**

– Advancements in prompt engineering techniques to improve the robustness of RAG models against manipulation

– Architectural changes to RAG models to better secure the integration of external knowledge sources

– Deployment of safety measures, such as content filtering and anomaly detection, to identify and mitigate prompt injection attacks

The Path Forward

Addressing the vulnerability of RAG models to prompt injection will be a critical challenge for the AI research community. Developing more secure and trustworthy AI systems that can reliably leverage external knowledge sources will be essential for the widespread adoption and responsible use of these technologies.

Conclusion

The vulnerability of Retrieval Augmented Generation (RAG) models to prompt injection attacks is a significant concern for the AI community. Instead of neutralizing the risk, RAG models can actually multiply the impact of prompt injection, leading to the generation of false or misleading information. Addressing this issue will require advancements in prompt engineering, model architecture, and safety measures to ensure the integrity and trustworthiness of AI systems that rely on external knowledge sources.

FAQ

What is Retrieval Augmented Generation (RAG)?

Retrieval Augmented Generation (RAG) is a type of AI model that combines a language model with external knowledge sources, such as databases or information retrieval systems, to enhance its language modeling capabilities. RAG models are used in a variety of AI applications, including language models, question-answering systems, and knowledge-intensive tasks.

How are RAG models vulnerable to prompt injection attacks?

RAG models rely on external knowledge sources to augment their language modeling capabilities. However, this reliance on external information can also be a weakness, as these knowledge sources can be manipulated through prompt injection attacks. Attackers can craft prompts that exploit vulnerabilities in the external knowledge sources, causing the RAG model to generate false or misleading information.

What are the implications of the RAG vulnerability?

The vulnerability of RAG models to prompt injection attacks has significant implications for the trustworthiness and reliability of AI systems that rely on these models. Prompt injection could lead to the generation of false or misleading information, undermining the credibility and reliability of AI systems in domains such as healthcare, finance, and policymaking, where AI-generated outputs are relied upon for decision-making.

What are some potential mitigation strategies?

Addressing the vulnerability of RAG models to prompt injection will require a multi-faceted approach. Potential mitigation strategies include advancements in prompt engineering techniques to improve the robustness of RAG models against manipulation, architectural changes to RAG models to better secure the integration of external knowledge sources, and the deployment of safety measures, such as content filtering and anomaly detection, to identify and mitigate prompt injection attacks.

Which AI applications are affected by the RAG vulnerability?

The vulnerability of RAG models to prompt injection attacks can affect a wide range of AI applications, including language models, question-answering systems, and knowledge-intensive tasks, such as summarization, dialogue, and information retrieval. Any AI system that relies on RAG models to leverage external knowledge sources could be susceptible to the risks posed by prompt injection attacks.

What is the overall impact of the RAG vulnerability on the trustworthiness of AI systems?

The vulnerability of RAG models to prompt injection attacks has significant implications for the trustworthiness and reliability of AI systems. If left unaddressed, this vulnerability could undermine the credibility of AI-generated outputs, leading to concerns about the integrity and trustworthiness of these systems, especially in high-stakes domains where AI is relied upon for decision-making. Addressing this issue will be a critical challenge for the AI research community to ensure the responsible and reliable deployment of AI technologies.

How would you rate Prompt Injection Amplified by RAG – A Breakthrough AI Innovation?