🎯 KEY TAKEAWAY

If you only take a few things from this review, make it these.

- Hugging Face Transformers is the industry standard for Natural Language Processing, offering thousands of pre-trained models.

- The library solves the problem of model accessibility, allowing complex AI implementation in just a few lines of code.

- Hugging Face offers “pay-for-what-you-use” cloud hosting, while the open-source library itself remains free.

- The main trade-off is the high computational cost (GPU memory) required to run large models effectively.

- Best suited for developers and researchers who need state-of-the-art accuracy in text generation, classification, and translation.

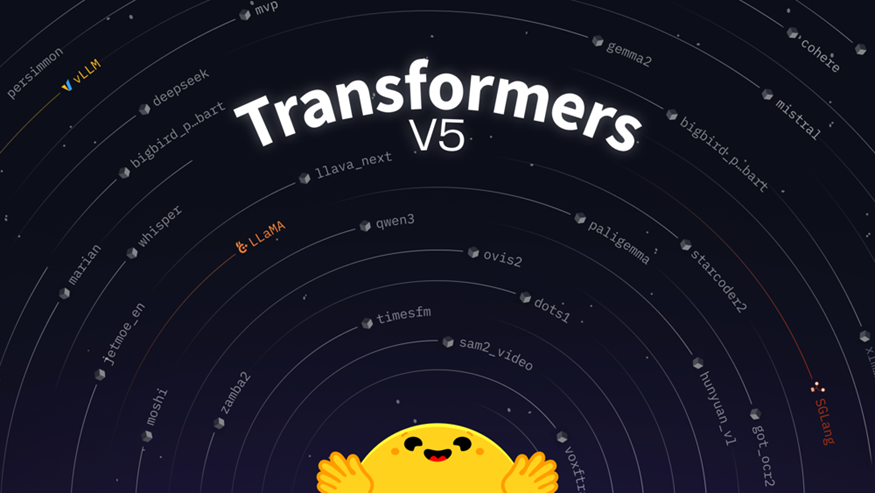

Introduction

The AI community is buzzing about recent updates to Transformers, the foundational Hugging Face library that powers the vast majority of today’s open-source Large Language Models (LLMs). The label “v5” is used here the way much of the community uses it: as shorthand for the broad shift toward Transformer-based architectures and the simplification of the `transformers` library. Either way, it marks a pivotal moment in which state-of-the-art AI becomes far more accessible. The library addresses a critical bottleneck, complexity, by letting developers implement, fine-tune, and deploy models like BERT, GPT, and T5 without building infrastructure from scratch. It is designed for AI researchers, software engineers, and data scientists who want cutting-edge NLP capabilities without training massive models from zero. The key benefit is democratization: thousands of pre-trained models that can be integrated into applications with just a few lines of code.

Key Features and Capabilities

Hugging Face Transformers is not just a library; it is an ecosystem. Its standout feature is the `pipeline` API, which abstracts away the tokenization and tensor manipulation required to use models. For example, a user can set up a text classification system in about three lines of code. The ecosystem rests on three core libraries: Tokenizers (fast text processing), Transformers (the neural network models), and Datasets (efficient data handling). The Hugging Face Hub hosts well over 100,000 pre-trained models in dozens of languages, for tasks ranging from text generation to computer vision and audio processing. Rather than building a custom Transformer from scratch, developers can start from checkpoints already fine-tuned for tasks like Named Entity Recognition (NER) or Question Answering, drastically reducing the time to production.
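As a rough illustration of the `pipeline` API, the sketch below wraps a text-classification pipeline in a small function. It assumes the `transformers` package and a backend such as PyTorch are installed, and that a default model can be downloaded on first use. `classify` and `top_label` are hypothetical helper names for this example, not part of the library.

```python
def classify(texts, model_name=None):
    """Run a text-classification pipeline over a list of strings.

    Assumes `transformers` (plus a backend such as PyTorch) is installed.
    The first call downloads model weights from the Hub.
    """
    # Imported lazily so top_label() below works without the library.
    from transformers import pipeline

    # With no model name, the library picks a default for the task.
    kwargs = {"model": model_name} if model_name else {}
    classifier = pipeline("text-classification", **kwargs)
    return classifier(texts)


def top_label(result):
    """Format one pipeline result, a dict with 'label' and 'score' keys."""
    return f"{result['label']} ({result['score']:.2f})"


# Example usage (downloads a default model on first run):
# for result in classify(["I love this library!"]):
#     print(top_label(result))
```

For anything beyond a quick experiment, pinning `model_name` to a specific checkpoint is usually a safer choice than relying on the task default.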

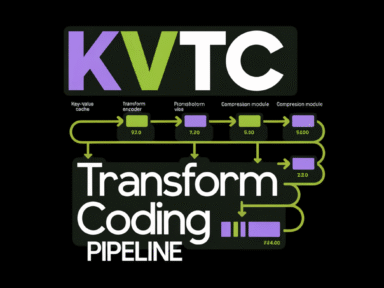

How It Works / Technology Behind It

At its core, the library wraps deep learning backends for its tensor operations: primarily PyTorch, with TensorFlow and JAX support varying by model and library version. The technology relies on the Transformer architecture, specifically the “Self-Attention” mechanism, which weighs the importance of each token in a sequence relative to every other token. The library is designed to be interoperable; a checkpoint trained in PyTorch can often be loaded in TensorFlow for deployment, and vice versa, where both backends are supported. Separately, the `Trainer` API handles the training loop, logging, and evaluation metrics for PyTorch models. This abstraction allows users to focus on data and hyperparameters rather than the mechanics of backpropagation.
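To make the self-attention idea concrete, here is a minimal pure-Python sketch of scaled dot-product attention. It is a teaching aid, not the library's implementation. Real models first pass token embeddings through learned query, key, and value projections, while this sketch feeds the same made-up vectors in directly.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights); weights[i][j] is how strongly
    token i attends to token j.
    """
    dim = len(keys[0])
    weights = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights.append(softmax(scores))
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[c] for w, v in zip(row, values)) for c in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Toy 2-dimensional vectors for three tokens (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1, so every output vector is a weighted blend of all tokens in the sequence.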

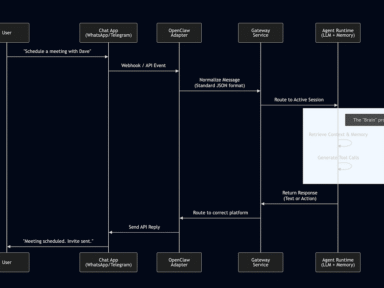

Use Cases and Practical Applications

The practical applications are vast. In the enterprise sector, companies use Transformers to build customer support chatbots that understand context and sentiment. In search, developers use the library to build semantic search engines that match on user intent rather than on keywords alone. In the legal sector, teams fine-tune models to scan thousands of documents for specific clauses (Contract Analysis). A specific example is a developer building a spam filter: instead of training a Naive Bayes classifier, they can download a RoBERTa model fine-tuned for spam detection from the Hub and deploy it with little extra work, often with higher accuracy.
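The semantic search use case comes down to comparing embedding vectors instead of keywords. The sketch below shows only the ranking step, using cosine similarity. The three-dimensional vectors are made up for illustration; a real system would obtain embeddings from a model. `rank_documents` is a hypothetical helper name.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return document ids sorted from most to least similar to the query."""
    scored = [
        (cosine_similarity(query_vec, vec), doc_id)
        for doc_id, vec in doc_vecs.items()
    ]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


# Made-up embeddings; a real system would compute these with a model.
documents = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for "how do I get my money back?"
ranking = rank_documents(query, documents)
```

The query shares no keywords with "refund-policy", yet that document ranks first because its vector points in nearly the same direction as the query's.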

Pricing and Plans

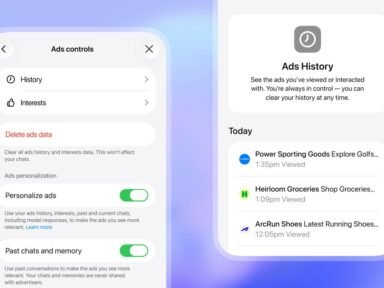

Hugging Face operates on a “freemium” model that is highly generous.

- Free Tier: Unlimited access to public models and datasets. You can run these locally or on your own infrastructure at no cost.

- Pro Account ($9/month): Provides access to private models and datasets, faster download speeds, and community support.

- Enterprise Hub: Custom pricing for organizations needing dedicated infrastructure, SSO, advanced security, and SLAs.

- Inference Endpoints: You pay for the compute time (GPU/CPU) used to host models in the cloud, typically starting at a few cents per hour depending on the hardware.

Pros and Cons / Who Should Use It

Pros:

- Unmatched Ecosystem: The sheer volume of community-contributed models is a massive advantage.

- Interoperability: Seamless switching between PyTorch and TensorFlow.

- Ease of Use: The `pipeline` API is arguably the best abstraction layer in the industry.

Cons:

- Resource Heavy: Transformer models require significant RAM and VRAM, making them expensive to run on low-end hardware.

- Learning Curve: While the API is simple, understanding the underlying architecture (attention masks, token types) is necessary for debugging.

Who Should Use It:

This library is essential for NLP practitioners, startups building AI features, and researchers needing reproducible results. It is less ideal for simple classification tasks where lightweight models (like SVMs) suffice or for environments with strict hardware limitations.

FAQ

Is Hugging Face Transformers free to use?

Yes, the open-source library and access to public models on the Hub are completely free. You only pay if you subscribe to a Pro account for private repositories or use their paid Inference Endpoints for cloud hosting.

Do I need a GPU to run Transformers?

While you can run smaller models (like DistilBERT) on a standard CPU, a GPU is highly recommended for training or fine-tuning models, and essential for running Large Language Models (LLMs) efficiently.
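A back-of-the-envelope calculation shows why model size drives the hardware requirement. The sketch below estimates the memory needed for model weights alone as parameter count times bytes per parameter. It ignores activations, caches, and optimizer state, so real usage is higher, especially during training. The parameter counts are rounded, illustrative figures.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="float16"):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Rounded, illustrative parameter counts.
small = weight_memory_gb(66_000_000, "float32")     # roughly DistilBERT-sized
large = weight_memory_gb(7_000_000_000, "float16")  # a 7-billion-parameter LLM
```

Under these assumptions the small model needs about 0.26 GB for its weights, which fits comfortably in CPU memory, while the 7-billion-parameter model needs about 14 GB before any working memory is counted.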

How does Hugging Face compare to OpenAI’s API?

Hugging Face offers open-source models that you can run locally or on your own cloud, giving you more data privacy and control. OpenAI provides proprietary, closed-source models accessed via API, which are often more powerful but require sending data to their servers.

Can I use Transformers for tasks other than text?

Absolutely. The library supports multimodal capabilities, including Computer Vision (image classification, object detection) and Audio (speech recognition, audio classification).

What support options are available?

Support is primarily community-driven via forums and GitHub. Paid Enterprise plans offer dedicated support, while Pro users get community support with faster response times.
