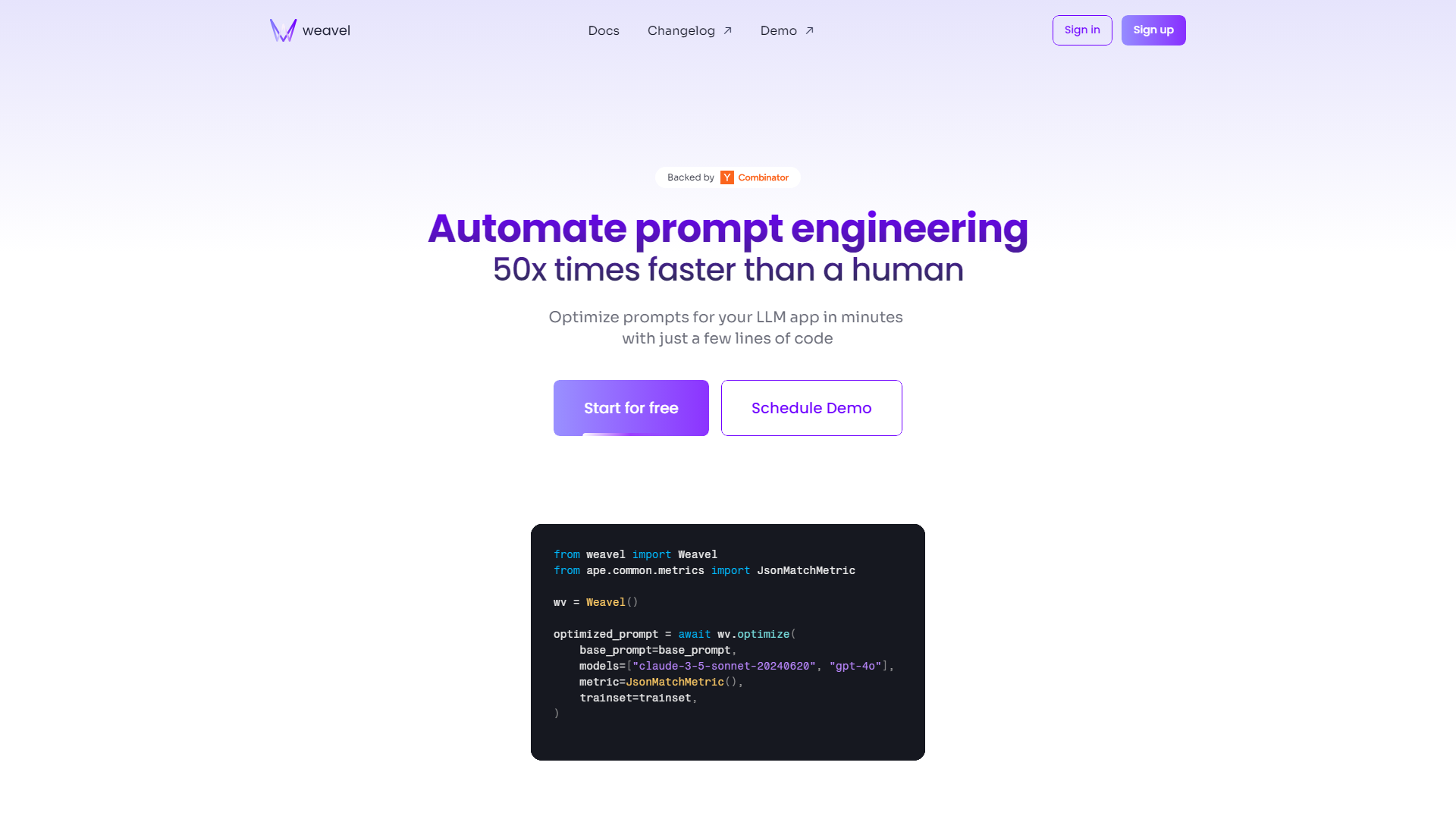

Large Language Models Redefined: Weavel's Ape Automates Prompt Engineering for Peak Performance

Large language models (LLMs) are transforming AI applications, but crafting effective prompts is often a slow, trial-and-error process. Weavel's Ape is an AI prompt engineering assistant built to improve the performance of LLM applications. Ape streamlines the prompt engineering workflow with tracing, dataset curation, batch testing, and automated evaluations. It lets users continuously optimize prompts against real-world data, and its CI/CD integration helps catch performance regressions before they reach production. Human-in-the-loop scoring adds nuanced feedback that automated metrics can miss, which helps make LLM outputs more reliable. Ape also auto-generates evaluation code, which simplifies assessment and metric reporting. With Weavel's Ape, developers and AI researchers can improve their LLM applications with measurable performance metrics and straightforward integration.
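The source does not show Ape's interface, so the following is not Weavel's API. It is a minimal, hypothetical Python sketch of the general batch-testing and regression-gate idea described above: run a prompt template over a curated dataset, score each output with an automated evaluator, and fail the check when a candidate prompt scores below the current baseline. The names `Example`, `exact_match`, `batch_score`, `passes_regression_gate`, and the stubbed `fake_llm` are illustrative assumptions; a real setup would call an actual model and would likely combine richer evaluators with human scores.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Example:
    """One curated dataset row (hypothetical schema): model input and expected answer."""
    input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Automated evaluator: 1.0 if the whitespace/case-normalized output equals the expected answer."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def batch_score(
    prompt_template: str,
    dataset: Sequence[Example],
    call_llm: Callable[[str], str],
    evaluate: Callable[[str, str], float] = exact_match,
) -> float:
    """Batch test: render the prompt for every example, call the model, and return the mean score."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores = [
        evaluate(call_llm(prompt_template.format(input=ex.input)), ex.expected)
        for ex in dataset
    ]
    return sum(scores) / len(scores)


def passes_regression_gate(candidate: float, baseline: float, tolerance: float = 0.0) -> bool:
    """CI check: the candidate prompt must not score below the baseline minus a tolerance."""
    return candidate >= baseline - tolerance


if __name__ == "__main__":
    data = [Example("2+2", "4"), Example("3+5", "8")]

    def fake_llm(prompt: str) -> str:
        # Hypothetical stand-in for a real model call: it sums the "a+b" after the
        # last colon, and answers tersely only when the prompt asks for a bare number.
        a, b = prompt.rsplit(":", 1)[-1].strip().split("+")
        answer = str(int(a) + int(b))
        return answer if "number only" in prompt else f"The answer is {answer}."

    baseline = batch_score("Give the number only: {input}", data, fake_llm)
    candidate = batch_score("Solve: {input}", data, fake_llm)
    print(f"baseline={baseline:.2f} candidate={candidate:.2f}")
    print("gate passed:", passes_regression_gate(candidate, baseline))
```

In a CI job, the script would exit with a non-zero status whenever `passes_regression_gate` returns `False`, which is what blocks a regressing prompt from being merged. Exact match is used here only because it is deterministic and easy to follow; open-ended outputs usually need model-graded or human scoring instead.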
