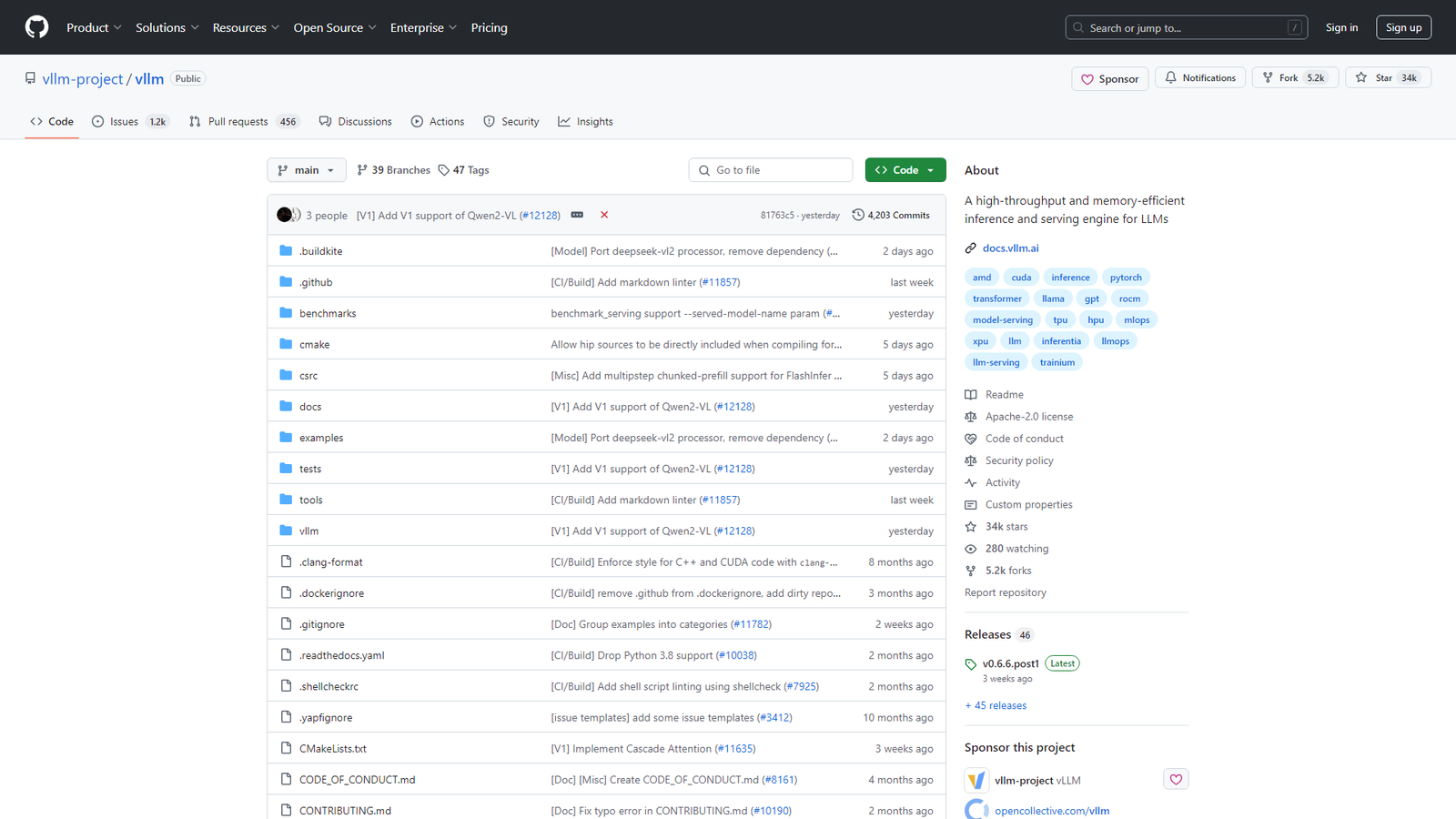

Large Language Models Take Flight with vLLM: The High-Throughput Inference Engine for Accelerated AI Deployments

Large language models (LLMs) are transforming industries, but deploying them efficiently remains a challenge. vLLM is a high-throughput, memory-efficient inference and serving engine designed specifically for LLMs. By managing memory efficiently, it delivers faster response times without compromising model performance, which makes it suitable for a wide range of deployment environments, from small startups to large enterprises. Support for multi-node configurations adds scalability, so deployments can spread load across machines during peak request periods. With vLLM, businesses can run LLMs faster and more efficiently, opening up new possibilities for AI applications.
