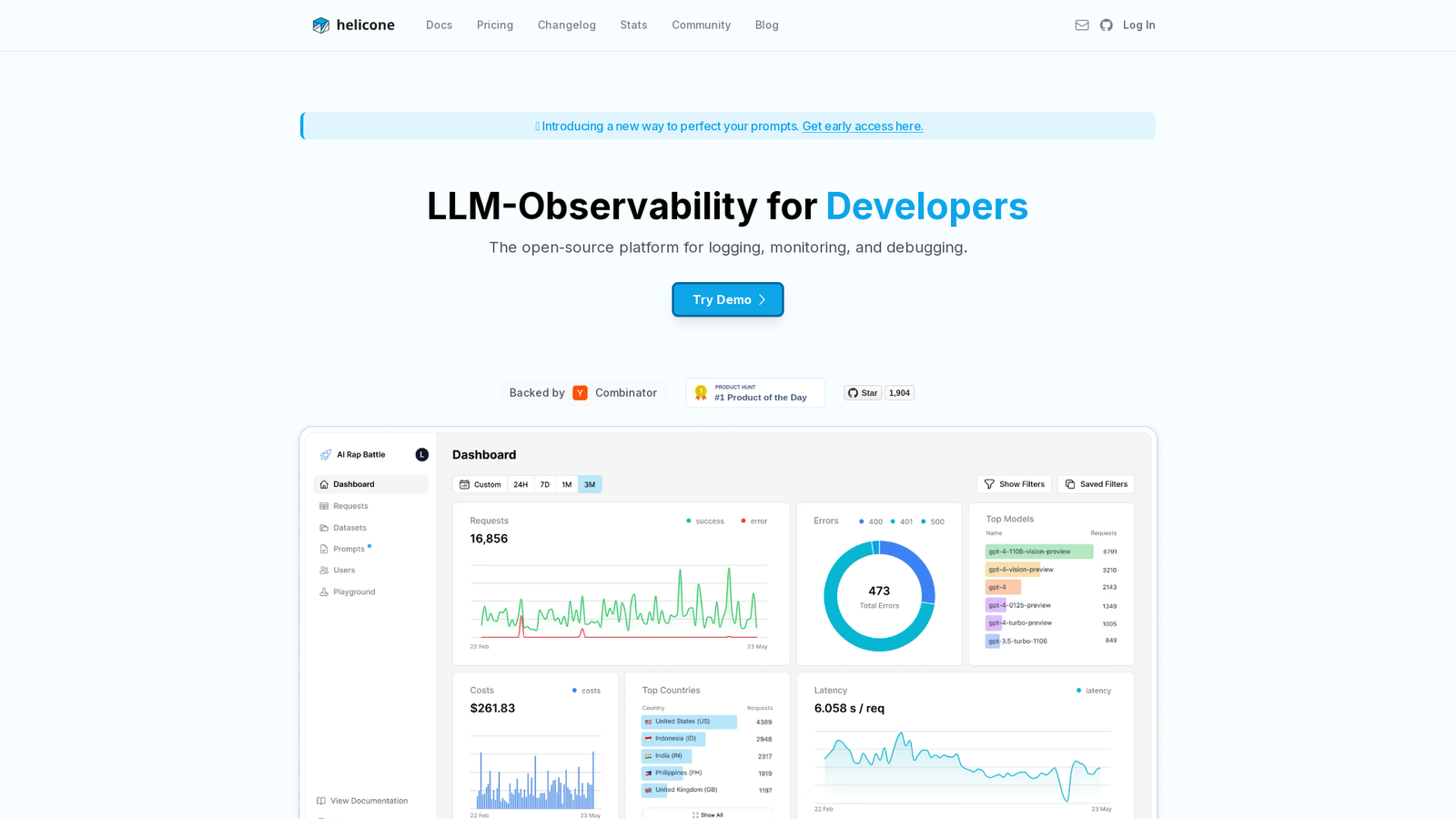

Machine Learning Demystified: Introducing Helicone AI

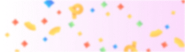

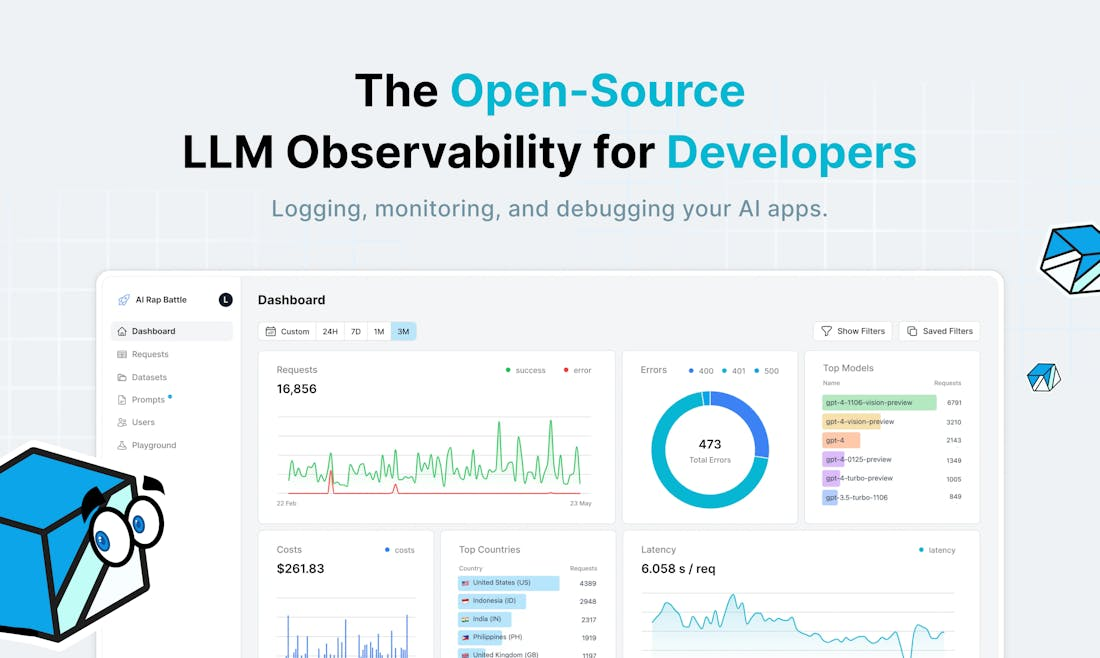

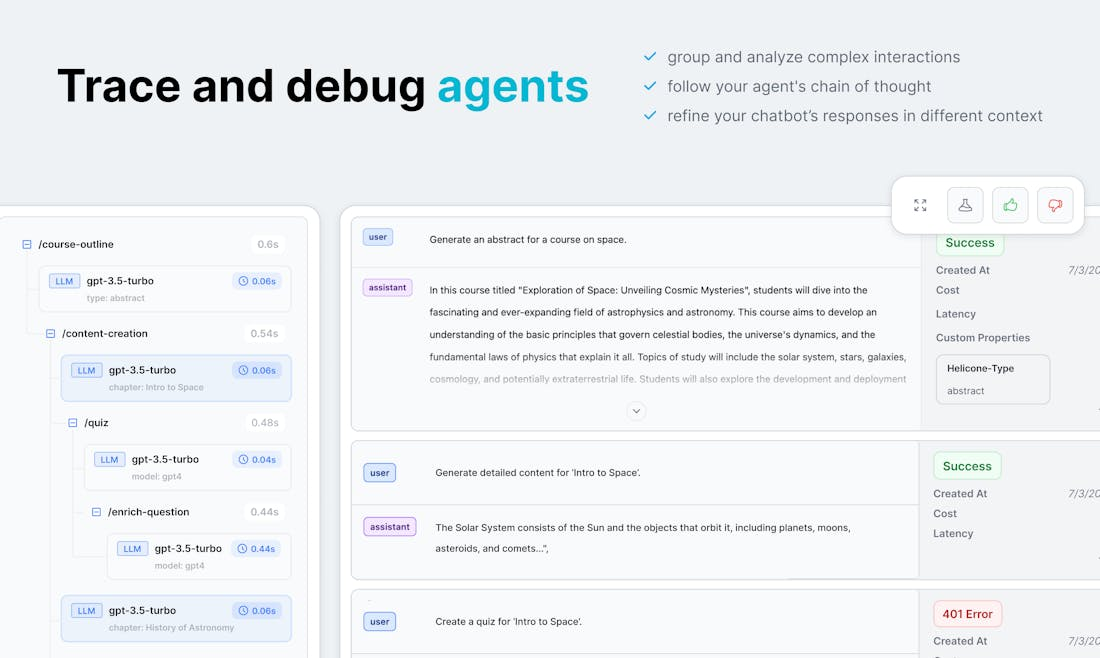

Machine learning models are powerful tools, but understanding how they behave in production can be a challenge. Helicone AI is an open-source observability platform designed to simplify this process. It provides a way to log, monitor, and debug LLM-powered applications, whether they involve chatbots, generative AI, or API integrations. With a one-line code change, you get usage tracking, LLM performance metrics, and prompt management in a single interface. Helicone helps developers build, analyze, and optimize their AI projects with a clearer view of what their models are doing.
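The one-line integration is typically a proxy-style change: requests go to a Helicone gateway URL instead of directly to the model provider, with one extra authentication header. The sketch below only builds such a request with the Python standard library and does not send it. The gateway URL, the `Helicone-Auth` header name, the model name, and both keys are assumptions or placeholders and should be checked against Helicone's current documentation.

```python
import json
import urllib.request

# Assumed gateway endpoint for OpenAI-compatible traffic; verify before use.
HELICONE_OPENAI_BASE = "https://oai.helicone.ai/v1"


def build_chat_request(openai_key: str, helicone_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request routed through the proxy."""
    payload = {
        "model": "gpt-4o-mini",  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{HELICONE_OPENAI_BASE}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The provider key is passed through unchanged.
            "Authorization": f"Bearer {openai_key}",
            # Assumed header that identifies the Helicone account for logging.
            "Helicone-Auth": f"Bearer {helicone_key}",
        },
        method="POST",
    )


# Placeholder keys; nothing is sent over the network here.
request = build_chat_request("sk-placeholder", "hc-placeholder", "Hello")
print(request.full_url)
```

Compared with a direct provider call, only the base URL and one header differ, which is why this style of integration is described as a single-line change.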

Pricing

The Helicone website highlights the following.

Core functionality

LLM management and orchestration: Helicone is designed to help developers manage, monitor, and deploy various large language models (LLMs).

Observability and monitoring: real-time performance monitoring, including latency, error rates, usage metrics, and cost analysis.

Evaluation and improvement: tools for evaluating LLM performance with techniques such as "LLM as a Judge" and for tracking improvements over time.

Key features

Open source and community driven: the code is open source, and the team engages with users on platforms like Discord.

Cost calculator: a tool to compare the costs of different LLM providers and models.

Data privacy and security: the site mentions SOC 2 certification and HIPAA compliance.

Integrations: compatibility with popular LLM providers (OpenAI, Anthropic, Azure, etc.).

Target audience

Helicone is aimed at developers, researchers, and organizations working with LLMs, for example to build AI-powered chatbots or assistants, create text generation or summarization tools, or develop custom machine learning models.
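To make the cost comparison idea concrete, here is a minimal sketch of the arithmetic such a calculator performs: per-request cost is the token count multiplied by a per-million-token price, summed over input and output. The model names and prices are hypothetical and are not taken from Helicone or from any provider's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_million: float   # USD per 1M input tokens (hypothetical)
    output_per_million: float  # USD per 1M output tokens (hypothetical)


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the given token counts."""
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


# Made-up prices for two made-up models, for illustration only.
PRICES = [
    ModelPrice("model-a", 0.50, 1.50),
    ModelPrice("model-b", 3.00, 15.00),
]

for price in PRICES:
    cost = request_cost(price, input_tokens=1_200, output_tokens=300)
    print(f"{price.name}: ${cost:.6f}")
```

Multiplying the per-request figure by expected request volume gives a rough monthly estimate for each model.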

Freemium

