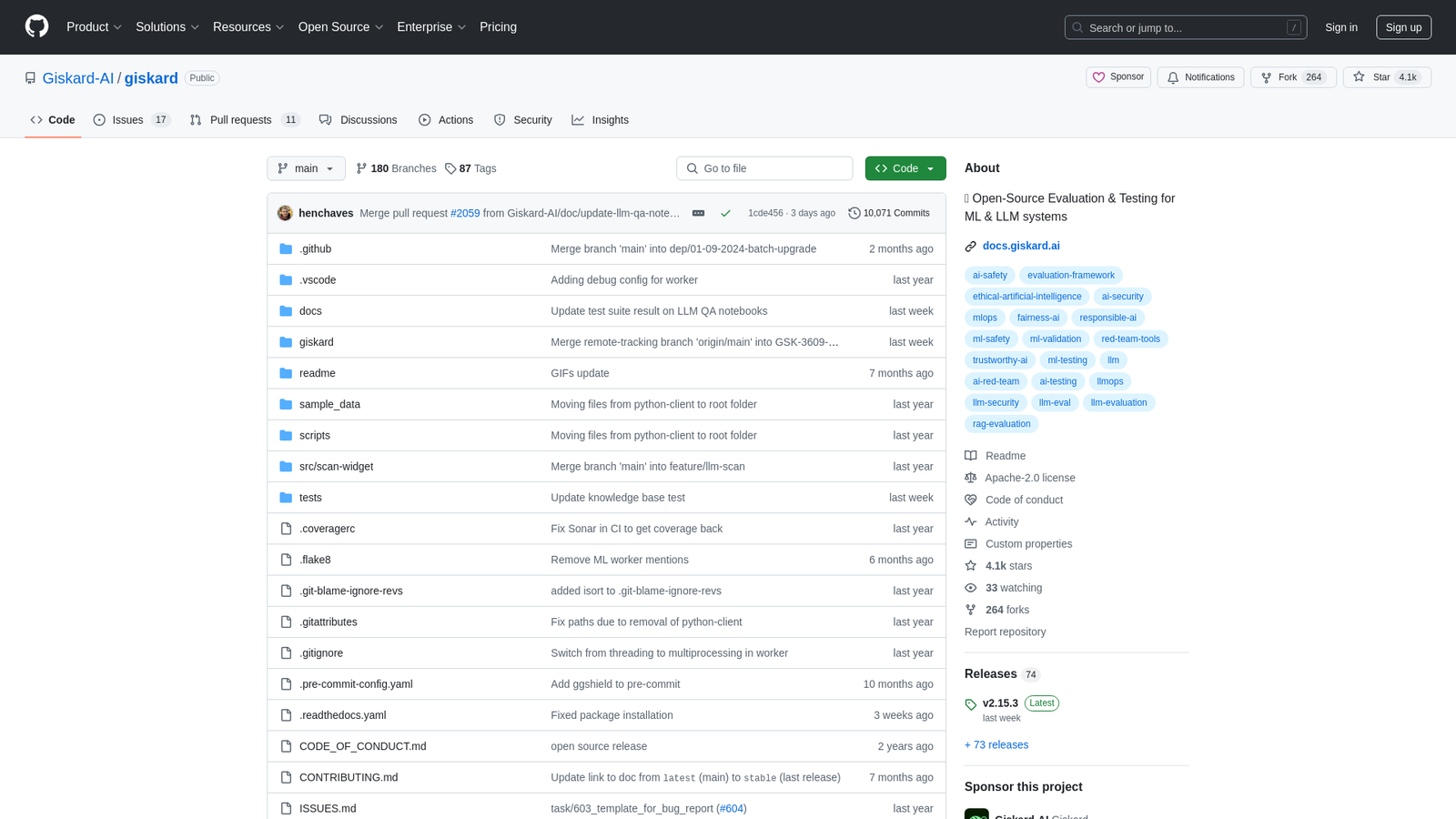

Automation Unleashed: Giskard Streamlines AI Testing at Scale

Generative AI, machine learning, and AI services delivered through APIs are rapidly reshaping the technology landscape. Ensuring that these systems behave reliably and ethically requires rigorous testing. Enter Giskard, a platform for automating the testing of large language models (LLMs) and machine learning models at scale. Giskard automatically detects issues such as hallucinations and biases in AI systems, giving developers concrete findings to guide refinement. As an enterprise testing hub, Giskard integrates with popular tools such as 🤗 Hugging Face, MLflow, and Weights & Biases, and it can be self-hosted or deployed in the cloud. Whether you work with tabular models or LLMs, Giskard simplifies testing so you can build more trustworthy and reliable AI applications.
