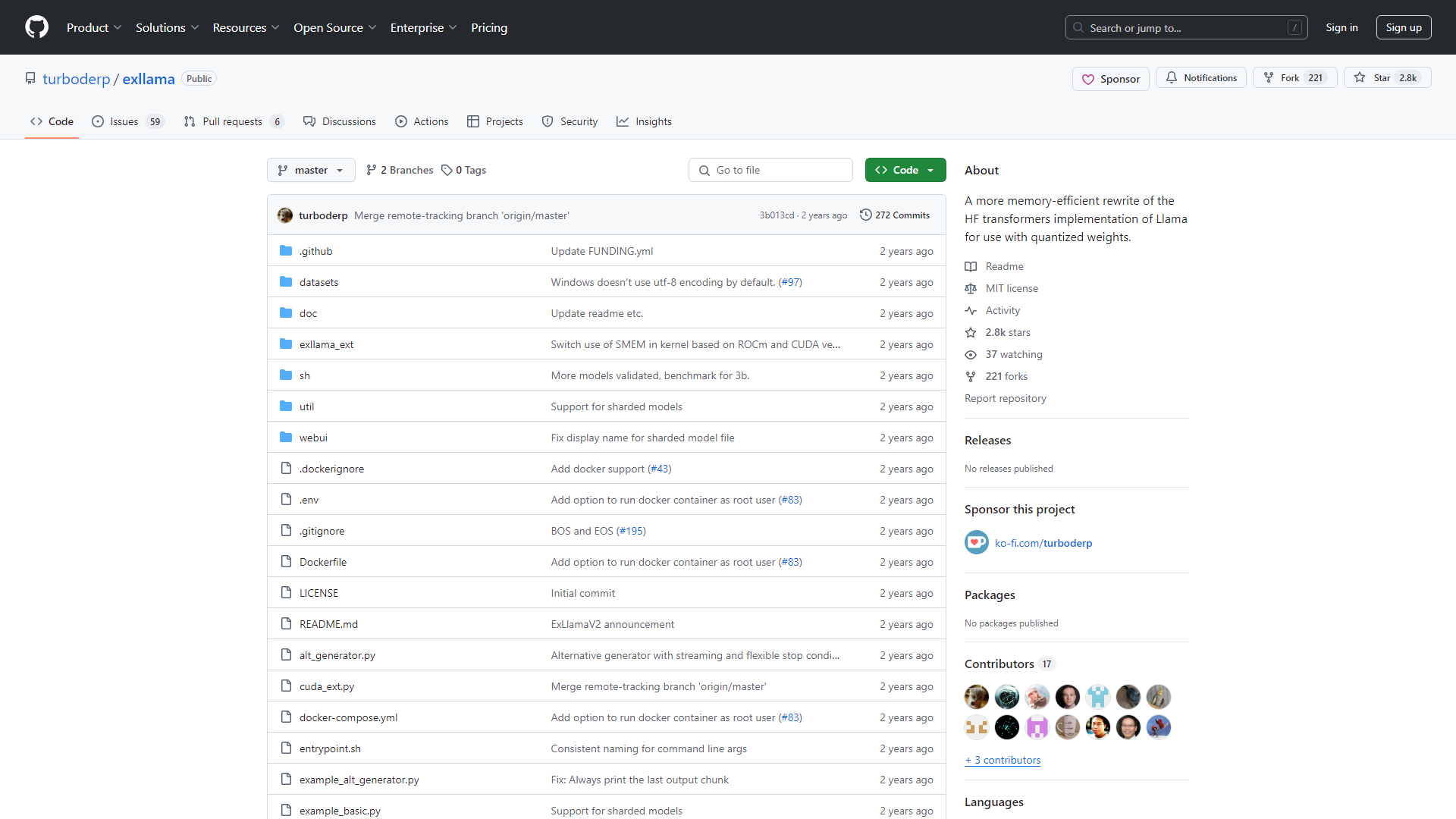

Exllama: Unleash the Power of LLaMA with Memory Efficiency

Large language models (LLMs) have transformed natural language processing, but their memory demands can be a barrier for developers. Exllama is designed to close this gap. It is a memory-efficient rewrite of the Hugging Face transformers implementation of LLaMA, built for use with quantized weights. Storing weights at reduced precision cuts memory consumption while keeping inference fast, which makes Exllama well suited to modern GPUs such as NVIDIA's RTX series. With support for sharded models, configurable processor affinity for tuning performance, and flexible stop conditions for text generation, Exllama lets developers and researchers run capable models without the usual memory overhead of large transformer architectures.
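To see why quantized weights matter, it helps to estimate how much memory the weights alone occupy at different precisions. The sketch below is a back-of-the-envelope calculation, not part of Exllama itself. The helper name `weight_memory_gib` is hypothetical. The parameter counts are rounded, illustrative values for LLaMA-family models. The 4-bit setting is an assumption chosen because it is a common quantization level. The estimate ignores activations, the KV cache, and per-group quantization metadata, so real usage will be somewhat higher.

```python
# Back-of-the-envelope estimate of the memory needed to hold model weights.
# Assumption: only the raw weights are counted; activations, the KV cache,
# and quantization metadata (scales, zero points) are ignored.


def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Return the size of the weights in GiB at the given precision."""
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 2**30  # bytes -> GiB


if __name__ == "__main__":
    # Rounded, illustrative parameter counts (hypothetical values).
    for name, params in [("7B", 7e9), ("13B", 13e9), ("33B", 33e9)]:
        fp16 = weight_memory_gib(params, 16)
        int4 = weight_memory_gib(params, 4)
        print(f"{name}: fp16 ~ {fp16:.1f} GiB, 4-bit ~ {int4:.1f} GiB")
```

At any model size, 4-bit weights take a quarter of the space of 16-bit weights. This reduction is what allows larger models to fit into the VRAM of a single consumer GPU.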
