Revolutionizing LLM Evaluation with BenchLLM

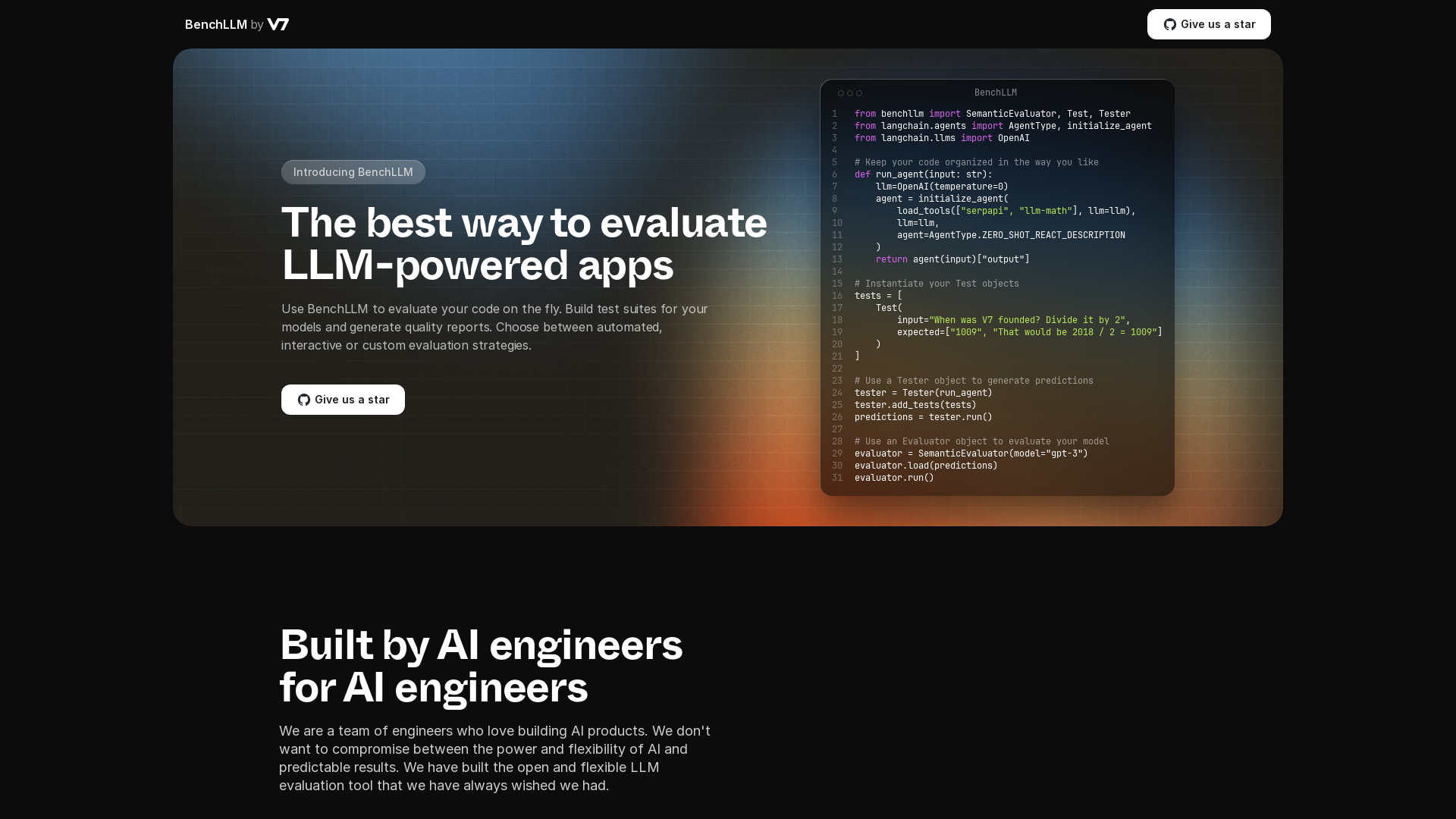

TL;DR: BenchLLM is a tool for evaluating applications built on large language models (LLMs). It offers automated, interactive, and custom evaluation strategies, and lets AI engineers and developers build test suites and generate quality reports. It works with APIs such as OpenAI and Langchain, tests are defined in JSON or YAML, and evaluations can run inside continuous integration and continuous deployment (CI/CD) pipelines. It can be used whether the application under test is built on GPT-3.5 Turbo, GPT-4, or another generative model.

2023-07-21

Mastering LLM Evaluation with BenchLLM

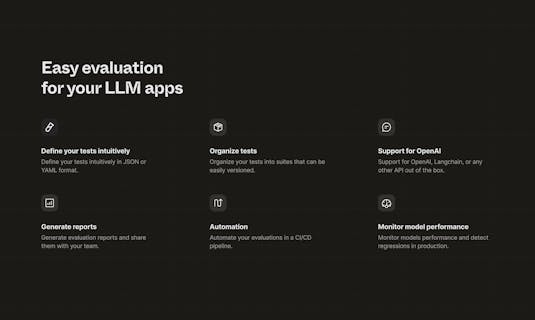

BenchLLM is a tool for evaluating applications powered by Large Language Models (LLMs). It combines automated, interactive, and custom evaluation strategies, and lets AI engineers and developers build test suites, generate quality reports, and monitor model performance over time. It supports APIs such as OpenAI and Langchain, so it can be integrated into existing workflows, and its customizable evaluation methods make it usable by both beginners and experienced practitioners. Here are 8 key features of BenchLLM:

BenchLLM offers a blend of automated, interactive, and custom evaluation strategies, allowing developers to conduct comprehensive assessments of their LLM-based applications in real-time.

BenchLLM supports a variety of APIs, including OpenAI and Langchain, making it versatile for evaluating a wide range of LLM-powered applications.

The tool enables developers to build test suites tailored to their specific needs, ensuring that models are thoroughly evaluated and optimized for performance.

BenchLLM's semantic evaluator (SemanticEvaluator) uses OpenAI's GPT-3 to judge whether a model's output matches the expected answer in meaning, rather than character for character.

Users can define tests using intuitive JSON or YAML formats, making it easy to set up and run evaluations without extensive coding knowledge.

BenchLLM integrates seamlessly with CI/CD pipelines, allowing for continuous monitoring and real-time performance evaluation of LLMs.

The tool generates detailed quality reports, providing insights into model performance and helping developers make informed decisions about their LLMs.

BenchLLM allows for real-time feedback integration, enabling developers to send user feedback directly to the model for continuous improvement and fine-tuning.

Pros

- Comprehensive evaluation strategies including automated, interactive, and custom methods

- Flexible API support for various AI tools like OpenAI and Langchain

- Easy installation with pip and a quick getting-started process

- Comprehensive support for test suite building and quality report generation

- Intuitive test definition in JSON or YAML formats for easy customization

Cons

- Lack of user-friendly interface for non-technical users

- Early stage of development with rapid changes

- Potential for high computational resource usage

- Limited support for non-LLM models

- Dependence on specific AI APIs like OpenAI and Langchain for full functionality

Pricing

The available sources do not list pricing for BenchLLM. The tool is described as free and open-source, so no subscription appears to be required. Evaluations that call paid APIs such as OpenAI may still incur usage costs from those providers.

Free (open-source)

TL;DR

Because you have little time, here's the mega short summary of this tool. BenchLLM is a versatile, open-source tool for evaluating large language models (LLMs), offering automated, interactive, and custom evaluation strategies. It supports integration with various APIs, including OpenAI and Langchain, and provides test suite building and quality report generation for checking the performance of LLM-based applications.

FAQ

What is BenchLLM?

BenchLLM is an open-source tool designed to evaluate Large Language Models (LLMs). It allows users to test and compare LLMs using automated, interactive, or custom evaluation strategies. BenchLLM supports various APIs like OpenAI and Langchain, enabling developers to build test suites and generate quality reports. Users specify inputs and expected outputs in JSON or YAML files; the tool runs the model on each input, captures the prediction, and compares it against the expected outputs to assess performance.
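To make the input / expected-output workflow concrete, here is a minimal sketch in plain Python. It is not BenchLLM's API: the names `TestCase`, `load_suite`, `run_suite`, and `toy_model`, as well as the `input` and `expected` field names, are assumptions chosen for illustration, and the comparison is a simple exact match.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TestCase:
    input: str
    expected: List[str]  # any one of these counts as a correct answer


def load_suite(raw_json: str) -> List[TestCase]:
    """Parse a JSON array of {"input": ..., "expected": [...]} objects (assumed layout)."""
    return [
        TestCase(item["input"], [str(e) for e in item["expected"]])
        for item in json.loads(raw_json)
    ]


def run_suite(model: Callable[[str], str], suite: List[TestCase]) -> List[dict]:
    """Capture one prediction per test and compare it with the expected outputs."""
    results = []
    for case in suite:
        prediction = model(case.input)
        passed = prediction.strip() in case.expected
        results.append(
            {"input": case.input, "prediction": prediction, "passed": passed}
        )
    return results


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    return "2" if "1+1" in prompt else "unknown"


SUITE_JSON = """
[
  {"input": "What's 1+1? Answer with a number only.", "expected": ["2", "2.0"]},
  {"input": "What is the capital of France?", "expected": ["Paris"]}
]
"""

results = run_suite(toy_model, load_suite(SUITE_JSON))
for r in results:
    print("PASS" if r["passed"] else "FAIL", "-", r["input"])
```

Swapping `toy_model` for a function that calls a real model is the only change needed to reuse the same suite against an actual application.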

What evaluation strategies does BenchLLM support?

BenchLLM supports three primary evaluation strategies: automated, interactive, and custom. Automated evaluations run and score tests without human involvement, interactive evaluations ask the user to judge each output manually, and custom evaluations let users tailor the evaluation logic to their specific needs. Within automated evaluation, BenchLLM offers options such as semantic comparison using GPT-3 and plain string matching.
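The difference between strict string matching and a looser, custom comparison can be sketched as follows. This is an illustration only, not BenchLLM code: `string_match` and `make_fuzzy_evaluator` are hypothetical names, and the fuzzy evaluator uses character-level similarity from Python's `difflib` as a crude stand-in for semantic comparison, which in BenchLLM is described as being done by GPT-3.

```python
from difflib import SequenceMatcher
from typing import Callable, List

# An evaluator takes a prediction and the accepted answers and returns pass/fail.
Evaluator = Callable[[str, List[str]], bool]


def string_match(prediction: str, expected: List[str]) -> bool:
    """Pass only if the prediction equals an expected answer,
    ignoring case and surrounding whitespace."""
    normalized = prediction.strip().lower()
    return any(normalized == e.strip().lower() for e in expected)


def make_fuzzy_evaluator(threshold: float) -> Evaluator:
    """Build a custom evaluator that passes when character-level similarity
    to any expected answer reaches the threshold.

    This is NOT a semantic judge; it only illustrates a pluggable strategy.
    """
    def evaluate(prediction: str, expected: List[str]) -> bool:
        return any(
            SequenceMatcher(None, prediction.lower(), e.lower()).ratio() >= threshold
            for e in expected
        )
    return evaluate


prediction = "The answer is Paris."
expected = ["Paris"]

print("string match:", string_match(prediction, expected))
print("fuzzy match: ", make_fuzzy_evaluator(0.3)(prediction, expected))
```

The strict evaluator rejects a verbose but correct answer, while the looser one accepts it. That trade-off is the usual reason to pick a semantic or custom strategy over string matching.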

Can BenchLLM be integrated with CI/CD pipelines?

Yes, BenchLLM can be integrated with CI/CD pipelines for continuous monitoring of model performance. Running the test suite on every change means model or prompt updates are evaluated before deployment, helping developers maintain reliable and accurate LLM applications.
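One common way to wire an evaluation run into a pipeline is to turn the pass rate into a process exit code, since most CI systems fail a job on a non-zero exit. The sketch below shows that idea in plain Python; `pass_rate`, `ci_exit_code`, and the threshold value are assumptions for illustration, not part of BenchLLM.

```python
from typing import List


def pass_rate(outcomes: List[bool]) -> float:
    """Fraction of tests that passed; an empty suite counts as 0.0
    so that it cannot pass silently."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def ci_exit_code(outcomes: List[bool], min_pass_rate: float = 1.0) -> int:
    """Return 0 when the suite meets the threshold, 1 otherwise."""
    return 0 if pass_rate(outcomes) >= min_pass_rate else 1


# Hypothetical outcomes from an evaluation run: three passes, one failure.
outcomes = [True, True, False, True]
print(f"pass rate: {pass_rate(outcomes):.0%}")
print("exit code:", ci_exit_code(outcomes, min_pass_rate=0.9))
```

In a real pipeline script, the last step would be `sys.exit(ci_exit_code(outcomes, min_pass_rate=0.9))`, so that a drop below the threshold blocks the deployment.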

What are the key benefits of using BenchLLM?

The key benefits of using BenchLLM include its flexibility in evaluation strategies, comprehensive test suite building, and detailed quality report generation. It also supports continuous integration, so model performance can be checked on every change. Defining tests in JSON or YAML keeps the testing process simple.

Is BenchLLM free, and what are its limitations?

BenchLLM is free and open-source. However, it is in the early stages of development and may be subject to rapid changes. The tool requires users to set up and manage their own infrastructure, which might limit its use for those without prior experience in AI model evaluation. Nonetheless, it is a powerful tool for developers looking to evaluate and refine their LLM applications.
