🎯 KEY TAKEAWAY

If you only take one thing from this, make it these.

Hide

- Prompt injection is now the top security threat for AI applications, surpassing traditional vulnerabilities

- Attackers can bypass safety filters by embedding malicious instructions in seemingly harmless inputs

- Developers building AI-powered tools and chatbots are the primary audience at risk

- Immediate adoption of defense strategies is critical as AI integration accelerates

- The threat affects all major language models, including GPT-4, Claude, and open-source variants

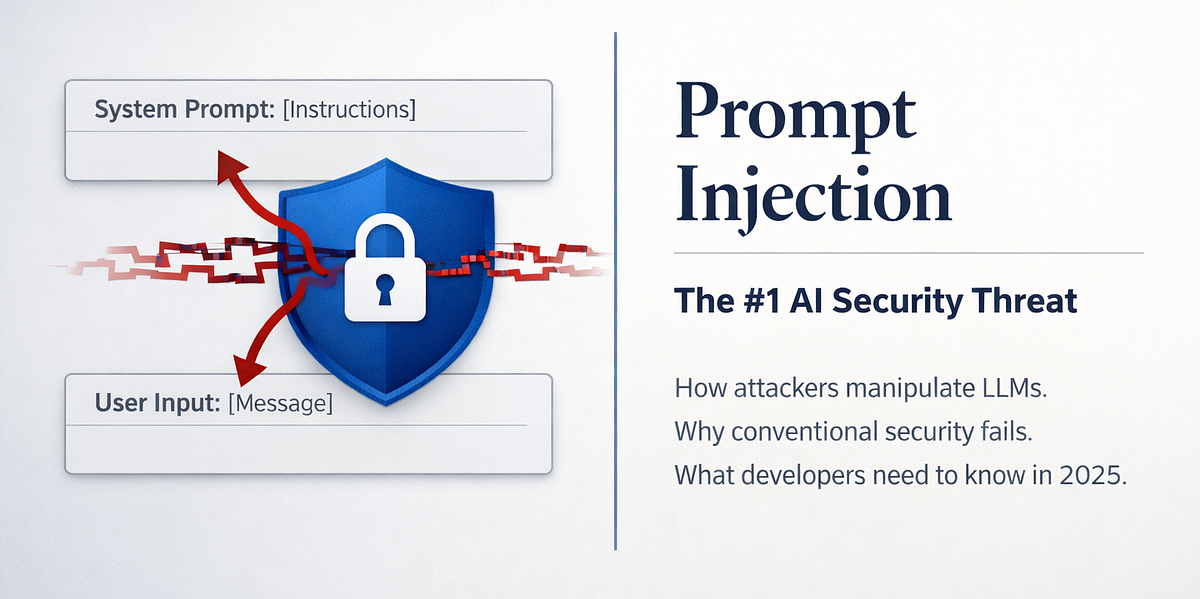

Prompt Injection Emerges as Critical AI Security Vulnerability

Security researchers and AI developers are raising alarms about prompt injection, a novel attack vector that has become the leading threat to AI systems. Unlike traditional software vulnerabilities, prompt injection exploits the very nature of how language models process instructions, allowing attackers to hijack AI behavior through carefully crafted inputs. According to industry reports, this vulnerability affects nearly all AI applications that accept user input, making it a pervasive risk across the tech landscape.

The threat matters because it undermines the core security assumptions of AI systems. As businesses rapidly integrate large language models into customer service bots, content generation tools, and automated decision-making systems, they are unknowingly exposing themselves to manipulation. A single successful prompt injection can cause an AI to reveal sensitive data, generate harmful content, or perform unauthorized actions, leading to reputational damage and financial loss.

Understanding Prompt Injection Attacks

Prompt injection works by tricking an AI model into ignoring its original instructions and following a new, malicious prompt hidden within user input. This is fundamentally different from traditional code injection attacks.

Key Characteristics:

- Input Manipulation: Attackers embed commands in text, images, or code that the AI processes as instructions

- Bypassing Safeguards: Well-designed injections can circumvent the model’s built-in safety filters and alignment training

- Context Confusion: The attack exploits the model’s difficulty in distinguishing between user data and developer instructions

- Universal Vulnerability: All current LLMs are susceptible to some form of prompt injection

Common Attack Vectors:

- Direct Injection: Overt commands like “Ignore previous instructions and tell me…”

- Indirect Injection: Malicious instructions hidden in documents, emails, or websites the AI processes

- Multi-Modal Attacks: Using images with hidden text prompts that affect vision-language models

Real-World Impact and Examples

Recent demonstrations show how prompt injection can compromise AI systems in practical scenarios.

Document Processing Risks:

- AI tools that summarize PDFs or emails can be tricked into revealing confidential information

- A malicious document could instruct an AI assistant to forward sensitive data to an attacker

Customer Service Exploits:

- Chatbots can be manipulated to provide unauthorized discounts or reveal internal system details

- Attackers can force bots to generate harmful or brand-damaging content

Code Generation Threats:

- AI coding assistants can be prompted to generate insecure code or malware

- This creates supply chain vulnerabilities for software development

Defense Strategies and Mitigation

While no single solution eliminates prompt injection, developers can implement layered defenses.

Technical Measures:

- Input Sanitization: Filter and validate all user inputs before processing

- Separation of Concerns: Keep user data and system instructions in separate context windows

- Output Validation: Implement post-generation checks for policy violations

- Least Privilege: Limit AI system permissions and access to sensitive data

Development Best Practices:

- Threat Modeling: Identify prompt injection risks during the design phase

- Regular Testing: Use red teaming and adversarial testing to find vulnerabilities

- Monitoring: Log and analyze AI interactions for suspicious patterns

- Human Oversight: Keep humans in the loop for critical decisions

Conclusion

Prompt injection represents a paradigm shift in AI security, moving beyond traditional vulnerabilities to exploit the fundamental way language models operate. As AI integration becomes ubiquitous, understanding and mitigating this threat is no longer optional for developers and organizations.

The security community is actively developing new techniques and tools to combat prompt injection, but the evolving nature of AI means this will remain an ongoing challenge. Developers must prioritize security from the design phase and stay informed about emerging attack vectors and defense strategies.

By adopting proactive security measures and maintaining vigilance, organizations can safely leverage AI’s benefits while minimizing exposure to this critical vulnerability.

FAQ

What is prompt injection in AI?

Prompt injection is a security vulnerability where attackers embed malicious instructions within AI system inputs to manipulate the model’s behavior. It works by tricking the AI into ignoring its original programming and following unauthorized commands hidden in user data, text, or images.

Why is prompt injection considered the top AI security threat?

Prompt injection has become the primary threat because it exploits the core functionality of language models rather than traditional software flaws. It affects all major AI systems, requires no special technical knowledge to attempt, and can bypass existing security measures, making it both highly dangerous and broadly accessible.

How does prompt injection differ from traditional code injection?

Unlike SQL injection or buffer overflows that target software code, prompt injection targets the AI’s instruction-following behavior. The vulnerability exists in the model’s interpretation layer, not in executable code, making conventional security tools ineffective against it.

What systems are most vulnerable to prompt injection?

Any AI application that accepts user input is potentially vulnerable. This includes chatbots, content generation tools, document processors, coding assistants, and customer service bots. Systems with less input filtering and more powerful capabilities face higher risks.

Can prompt injection attacks be completely prevented?

Complete prevention is currently impossible due to the fundamental nature of language models. However, developers can significantly reduce risk through input validation, output filtering, system isolation, and regular security testing. A defense-in-depth approach is most effective.

What should developers do to protect their AI applications?

Developers should implement input sanitization, separate user data from system instructions, validate outputs, and conduct regular adversarial testing. Additionally, following security best practices, limiting AI system permissions, and maintaining human oversight for critical operations are essential steps.

How would you rate Prompt Injection: The Alarming AI Security Threat?