🎯 KEY TAKEAWAY

The main points from this article:

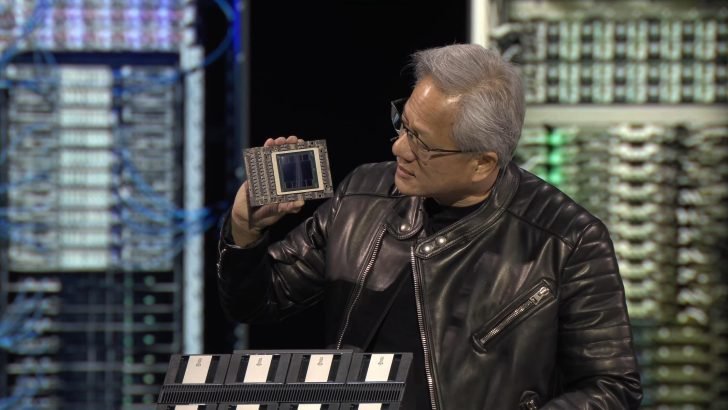

- NVIDIA announced new inference performance data from SemiAnalysis showing Blackwell Ultra delivers up to 50x better performance than previous generations

- The data highlights significant cost savings and efficiency gains for deploying agentic AI applications at scale

- Enterprise AI teams and cloud providers stand to benefit from reduced operational costs and higher throughput

- This performance leap positions NVIDIA’s Blackwell architecture as the foundation for next-generation AI infrastructure

- The findings validate NVIDIA’s roadmap for advancing AI inference capabilities

NVIDIA Blackwell Ultra Delivers 50x Performance Boost for Agentic AI

NVIDIA released new performance data from SemiAnalysis on January 15, 2024, showing its Blackwell Ultra architecture delivers up to 50x better inference performance compared to previous generations. The data demonstrates significant improvements in cost efficiency and throughput for deploying agentic AI applications. According to NVIDIA’s blog post, these gains enable enterprises to run more complex AI models at lower operational costs, making advanced AI more accessible for real-world applications.

Performance Breakthrough for AI Inference

SemiAnalysis conducted extensive testing of NVIDIA’s Blackwell Ultra architecture, revealing dramatic performance improvements across key metrics:

Performance and Capabilities:

- Delivers up to 50x better inference performance compared to previous NVIDIA generations

- Optimized for agentic AI applications requiring real-time decision making and complex reasoning

- Offers significantly improved cost efficiency, reducing operational expenses for large-scale AI deployments

Cost and Efficiency Metrics:

- Performance-per-dollar improvements enable more cost-effective AI infrastructure

- Higher throughput allows enterprises to serve more users with fewer resources

- Reduced latency improves user experience for interactive AI applications
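To make the performance-per-dollar idea concrete, the sketch below converts a GPU's hourly price and its token throughput into a cost per million generated tokens, then compares two systems. Every number in it is a hypothetical placeholder chosen for easy arithmetic, not a figure from NVIDIA or SemiAnalysis, and the function names are illustrative only.

```python
def cost_per_million_tokens(gpu_hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Return the USD cost of generating one million tokens on a single GPU."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_cost_usd / tokens_per_hour * 1_000_000


def perf_per_dollar_gain(old_cost: float, new_cost: float) -> float:
    """Return how many times cheaper the new system is per token."""
    return old_cost / new_cost


# Hypothetical inputs (assumptions, not measured data):
# an older GPU at $2/hour producing 100 tokens/s, and
# a newer GPU at $4/hour producing 1,000 tokens/s.
old_cost = cost_per_million_tokens(gpu_hourly_cost_usd=2.0, tokens_per_second=100.0)
new_cost = cost_per_million_tokens(gpu_hourly_cost_usd=4.0, tokens_per_second=1000.0)

print(f"old: ${old_cost:.2f} per million tokens")
print(f"new: ${new_cost:.2f} per million tokens")
print(f"gain: {perf_per_dollar_gain(old_cost, new_cost):.1f}x")
```

In this made-up case the newer GPU costs twice as much per hour but produces ten times the tokens, so the cost per token falls by a factor of five. A throughput gain and a cost gain are therefore not the same number; the hourly price has to be factored in.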

Enterprise Benefits:

- Lower total cost of ownership for AI workloads

- Ability to deploy more sophisticated AI models without prohibitive costs

- Improved scalability for growing AI application demands

Impact on AI Infrastructure and Enterprise Adoption

This performance data has significant implications for the broader AI ecosystem and enterprise adoption strategies:

Market implications:

- Cost reduction: Dramatic performance-per-dollar gains make advanced AI more accessible

- Infrastructure efficiency: Higher throughput reduces hardware requirements for AI workloads

- Competitive advantage: Enterprises can deploy more sophisticated AI capabilities faster
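The infrastructure-efficiency point can be illustrated with a rough capacity estimate: given a target aggregate throughput, higher per-GPU throughput means fewer GPUs. This is a minimal sketch with hypothetical numbers; it ignores latency targets, batching behavior, and redundancy, all of which matter in a real deployment.

```python
import math


def gpus_required(target_tokens_per_second: float, per_gpu_tokens_per_second: float) -> int:
    """Return the minimum number of GPUs needed to sustain the target throughput."""
    if per_gpu_tokens_per_second <= 0:
        raise ValueError("per_gpu_tokens_per_second must be positive")
    return math.ceil(target_tokens_per_second / per_gpu_tokens_per_second)


# Hypothetical workload (assumption): 50,000 tokens/s across all users.
target = 50_000

# Hypothetical per-GPU throughputs for an older and a newer system.
older_fleet = gpus_required(target, per_gpu_tokens_per_second=400)
newer_fleet = gpus_required(target, per_gpu_tokens_per_second=2_000)

print(f"older system: {older_fleet} GPUs")
print(f"newer system: {newer_fleet} GPUs")
```

With these placeholder values, a fivefold per-GPU throughput gain shrinks the fleet from 125 GPUs to 25 for the same load.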

Enterprise adoption factors:

- Scalability: Improved performance enables larger-scale AI deployments

- Cost control: Reduced operational expenses address budget constraints

- Innovation acceleration: Lower costs enable experimentation with more complex AI models

What Happens Next

NVIDIA will continue optimizing Blackwell Ultra for diverse AI workloads, with ongoing performance improvements expected throughout 2024. Enterprises should evaluate how these performance gains can accelerate their AI deployment strategies. Cloud providers are likely to integrate these capabilities into their service offerings, making the performance benefits broadly available to customers.

Conclusion

NVIDIA’s Blackwell Ultra architecture represents a significant leap forward in AI inference performance, delivering up to 50x improvements that make agentic AI more practical and cost-effective for enterprises. The data from SemiAnalysis validates NVIDIA’s approach to advancing AI infrastructure capabilities.

As AI applications become more complex and widespread, these performance gains will enable broader adoption and more sophisticated implementations across industries. Enterprises should monitor how these capabilities evolve and consider them in their AI infrastructure planning for 2024 and beyond.

FAQ

What is NVIDIA Blackwell Ultra?

NVIDIA Blackwell Ultra is the company’s latest GPU architecture designed specifically for AI inference workloads. It builds on the Blackwell architecture with optimizations that deliver up to 50x better performance for running AI models in production environments.

How does the 50x performance improvement compare to previous generations?

The performance gain is measured against NVIDIA’s previous generation of inference hardware. This represents one of the largest generational improvements in AI inference performance, enabling more complex AI models to run with lower latency and higher throughput.

What types of AI applications benefit most from Blackwell Ultra?

Agentic AI applications that require real-time decision making and complex reasoning see the greatest benefits. This includes conversational AI, recommendation systems, fraud detection, and other applications requiring rapid inference on large language models.

When will Blackwell Ultra be available to enterprises?

NVIDIA has not specified an exact availability date in this announcement. Typically, new GPU architectures become available through cloud providers first, followed by general availability to enterprise customers through server manufacturers and NVIDIA’s partners.

How does this affect the cost of running AI workloads?

The improved performance-per-dollar means enterprises can achieve more with less hardware. This translates to lower operational costs for cloud-based AI services and reduced capital expenditure for on-premises deployments.

What role does SemiAnalysis play in this announcement?

SemiAnalysis is an independent research firm that conducted performance testing of NVIDIA’s Blackwell Ultra architecture. Their data provides third-party validation of NVIDIA’s performance claims, giving enterprises additional confidence in the reported improvements.
