🎯 KEY TAKEAWAY

If you take only a few things from this article, make it these.

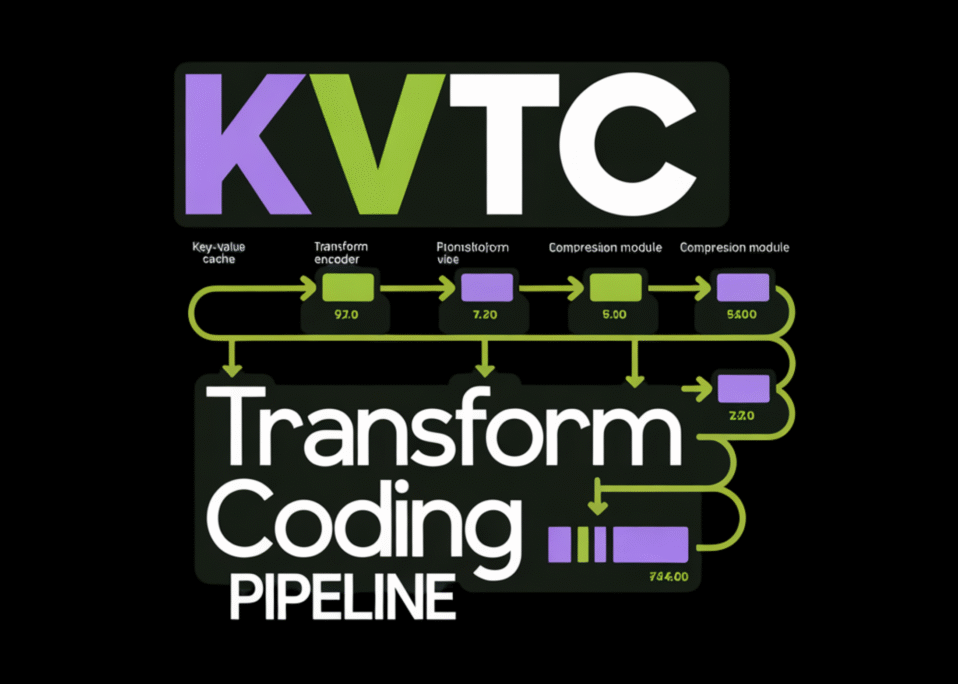

- NVIDIA researchers introduced the KVTC transform coding pipeline to compress key-value caches by 20x

- The method reduces memory bandwidth requirements for large language model inference

- This enables more efficient LLM serving and potentially lower costs for deployment

- The technology targets enterprise AI infrastructure and cloud providers

- The research was announced in February 2026 as an advance in inference optimization

NVIDIA Introduces KVTC Transform Coding Pipeline for 20x Cache Compression

On February 10, 2026, NVIDIA researchers announced KVTC, a transform coding pipeline that compresses key-value (KV) caches by a factor of 20 for more efficient LLM serving. The work targets the memory bandwidth bottleneck in large language model inference, which is a major driver of deployment cost. By shrinking the memory footprint of the cache during inference, the technique makes room for larger models or more concurrent users on the same hardware.
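To get a feel for the footprint involved, a common back-of-the-envelope estimate for a decoder-only transformer's KV cache counts two tensors (keys and values) per layer, each holding one vector per KV head per token. The sketch below applies that formula and then divides by the reported 20x ratio. The model dimensions are hypothetical round numbers chosen for illustration, not figures from the NVIDIA announcement.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_elem=2):
    """Approximate KV cache size in bytes for one sequence.

    The leading 2 accounts for storing both keys and values.
    bytes_per_elem=2 assumes 16-bit storage (an assumption for this sketch).
    """
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_elem


# Hypothetical model configuration, for illustration only.
raw = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8192)
compressed = raw / 20  # the 20x ratio reported in the article

print(f"uncompressed: {raw / 2**30:.2f} GiB")
print(f"at 20x:       {compressed / 2**20:.1f} MiB")
```

With these assumed dimensions the cache comes to 1 GiB per sequence, or roughly 51 MiB at 20x.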

KVTC Transform Coding Pipeline Details

The KVTC pipeline introduces a novel approach to compressing the key-value caches that accumulate during LLM inference:

Technical Implementation:

- Transform coding method: Applies compression transforms to the cached key and value tensors

- 20x compression ratio: Shrinks the cache to roughly one-twentieth of its original size while preserving model accuracy

- Memory bandwidth reduction: Drastically lowers data transfer requirements between memory and compute units

- Inference optimization: Designed specifically for serving LLMs in production environments
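The article does not describe which transforms KVTC uses, so the following is only a generic illustration of transform coding, not NVIDIA's method. It rotates a vector with an orthonormal Walsh-Hadamard transform (chosen here purely because it needs no external libraries), quantizes the coefficients uniformly, and then inverts both steps. A full pipeline would typically add an entropy coder after quantization; that stage is omitted here. The function names and the `step` parameter are hypothetical.

```python
import math


def hadamard(vec):
    """Orthonormal fast Walsh-Hadamard transform.

    len(vec) must be a power of two. With 1/sqrt(n) scaling the
    transform is its own inverse.
    """
    out = list(vec)
    n = len(out)
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a, b = out[j], out[j + h]
                out[j], out[j + h] = a + b, a - b
        h *= 2
    scale = 1.0 / math.sqrt(n)
    return [x * scale for x in out]


def encode(vec, step):
    """Transform, then quantize each coefficient to an integer code."""
    return [round(c / step) for c in hadamard(vec)]


def decode(codes, step):
    """Dequantize the integer codes, then invert the transform."""
    return hadamard([c * step for c in codes])


# Toy input standing in for one cached vector (illustrative values only).
vec = [1.0, 1.1, 0.9, 1.0, 1.2, 1.0, 0.9, 1.1]
step = 0.1  # quantization step: larger means fewer distinct codes, more error

codes = encode(vec, step)
restored = decode(codes, step)
max_err = max(abs(a - b) for a, b in zip(vec, restored))

print("integer codes:", codes)
print(f"max reconstruction error: {max_err:.4f}")
```

Because the transform is orthonormal, the reconstruction error is bounded by the quantization step, which is the knob that trades compression against accuracy in a scheme like this.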

Performance and Capabilities:

- Enhanced efficiency: Enables serving larger models with the same GPU memory capacity

- Cost reduction: Potentially lowers operational costs for cloud providers and enterprises

- Scalability improvements: Allows more concurrent inference requests per GPU

- Model compatibility: Works with various transformer-based architectures
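The scalability claim above can be made concrete with simple arithmetic: if each request's cache shrinks, more caches fit in the same memory budget. The sketch below assumes caches are held in compressed form and ignores any scratch space needed to decompress them; the budget and per-sequence sizes are hypothetical.

```python
def max_concurrent_sequences(cache_budget_bytes, bytes_per_sequence, compression_ratio=1):
    """Number of per-sequence KV caches that fit in a fixed memory budget.

    Simplification: ignores decompression workspace and fragmentation.
    """
    return int(cache_budget_bytes * compression_ratio // bytes_per_sequence)


GIB = 2**30
budget = 40 * GIB       # hypothetical memory left over for KV caches
per_sequence = 1 * GIB  # hypothetical uncompressed cache per sequence

baseline = max_concurrent_sequences(budget, per_sequence)
with_20x = max_concurrent_sequences(budget, per_sequence, compression_ratio=20)

print(f"baseline: {baseline} sequences, at 20x: {with_20x} sequences")
```

In practice the gain would likely be smaller than the raw ratio suggests, since some portion of the cache usually has to be decompressed before attention can use it.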

Research Context:

- Published by: NVIDIA Research team

- Announcement date: February 10, 2026

- Target application: Enterprise AI infrastructure and cloud LLM serving

Impact on LLM Serving and Industry

This innovation addresses a fundamental challenge in deploying large language models at scale:

Enterprise implications:

- Cost efficiency: Reduced memory requirements translate to lower hardware and operational expenses

- Deployment flexibility: Enables running larger models or more instances on existing infrastructure

- Performance gains: Faster inference, because less cache data has to move between memory and compute
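As a rough illustration of the bandwidth point, the time to stream a cache from memory once is at least its size divided by the available bandwidth. The sketch below uses hypothetical numbers and deliberately ignores the compute cost of decompression, which the article does not quantify.

```python
def cache_read_seconds(cache_bytes, bandwidth_bytes_per_s):
    """Lower bound on the time to stream a cache once from memory."""
    return cache_bytes / bandwidth_bytes_per_s


cache_bytes = 2**30  # hypothetical 1 GiB cache
bandwidth = 1e12     # hypothetical 1 TB/s memory bandwidth

t_raw = cache_read_seconds(cache_bytes, bandwidth)
t_20x = cache_read_seconds(cache_bytes / 20, bandwidth)

print(f"raw: {t_raw * 1e3:.3f} ms, at 20x: {t_20x * 1e3:.3f} ms")
```

Whether this saving shows up end to end depends on where and how often the cache is decompressed.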

Market dynamics:

- Cloud providers: Could offer more competitive LLM services with improved economics

- AI startups: More accessible deployment of sophisticated models with limited resources

- Research community: Advances in efficient inference techniques for future model development

What’s Next for KVTC Technology

NVIDIA’s research represents a significant step toward more efficient LLM deployment. The 20x compression ratio could transform how enterprises approach inference workloads, making advanced AI more accessible. Future developments may include integration into NVIDIA’s software stack and hardware optimizations for the technique.

Conclusion

NVIDIA’s KVTC transform coding pipeline represents a breakthrough in LLM inference efficiency, achieving 20x compression of key-value caches. This innovation directly addresses memory bandwidth bottlenecks that limit model deployment scale and cost-effectiveness.

The technology has significant implications for cloud providers, enterprises, and AI startups by reducing operational costs and enabling larger models on existing hardware. As NVIDIA continues to develop efficient inference techniques, KVTC could become a standard component in production LLM serving infrastructure, making advanced AI more accessible across industries.

FAQ

What is the KVTC transform coding pipeline?

KVTC is a new compression technique developed by NVIDIA researchers that reduces key-value cache sizes by 20 times during LLM inference. The transform coding pipeline applies specialized compression methods to key-value pairs, significantly lowering memory bandwidth requirements while maintaining model accuracy.

How does KVTC improve LLM serving efficiency?

By compressing caches by 20x, KVTC reduces the memory footprint needed for inference, allowing larger models or more concurrent users on the same GPU hardware. This directly addresses the memory bandwidth bottleneck that limits serving performance and increases costs.

What are the main benefits of this compression technique?

The primary benefits include a 20x reduction in cache size, lower memory bandwidth requirements, improved cost efficiency for deployment, and better scalability for serving LLMs. These improvements make it more economical to run large language models in production environments.

When was this research announced?

NVIDIA researchers introduced the KVTC transform coding pipeline on February 10, 2026, according to the announcement. The research represents a recent advancement in inference optimization techniques for large language models.

Who benefits most from KVTC technology?

Cloud providers, enterprises deploying AI applications, and AI startups stand to benefit most from this technology. Any organization running LLM inference workloads could see improved cost efficiency and better performance from KVTC’s compression approach.

What comes next for this technology?

The research may lead to integration into NVIDIA’s software stack and hardware optimizations in future products. Further development could include broader compatibility with different model architectures and potential adoption in production inference systems.
