Introduction

NVIDIA has introduced VibeTensor, an AI-generated deep learning runtime built end-to-end by coding agents. The tool is designed to streamline model deployment by automating the steps that turn a trained model into an optimized runtime environment. It is aimed primarily at AI engineers, researchers, and developers who need to deploy models without the usual overhead of manual tuning and configuration. By relying on AI coding agents, VibeTensor aims to shorten development cycles and improve runtime performance.

Key Features and Capabilities

VibeTensor’s features center on automation and performance. Its core capability is the autonomous generation of runtime kernels tailored to specific hardware architectures: the tool generates optimized code for NVIDIA GPUs rather than relying only on fixed, general-purpose kernels. It supports deep learning frameworks including PyTorch and TensorFlow, so it can be integrated into existing workflows.

Another notable feature is auto-tuning. Instead of relying on static configurations, VibeTensor uses AI to search the space of kernel parameters for the settings that give the best latency and throughput. This is particularly useful in production environments where resource constraints are tight. For example, a user could deploy a large language model (LLM) and have VibeTensor automatically adjust the kernel configurations to minimize inference time on a specific GPU type.
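The idea of searching a configuration space for the fastest setting can be illustrated with a minimal, generic sketch. It is not VibeTensor’s API or method: `matmul_tiled`, `autotune`, and the `tile` parameter are hypothetical names chosen for illustration, and a pure-Python blocked matrix multiply stands in for a GPU kernel. The sketch times each candidate configuration and keeps the fastest one.

```python
import itertools
import time


def matmul_tiled(a, b, tile):
    """Blocked matrix multiply; `tile` is the (hypothetical) tunable parameter."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_out = a[i], out[i]
                    for kk in range(k0, min(k0 + tile, k)):
                        a_ik, row_b = row_a[kk], b[kk]
                        for j in range(j0, min(j0 + tile, m)):
                            row_out[j] += a_ik * row_b[j]
    return out


def time_once(run, config):
    """Return the wall-clock seconds taken by one call of run(**config)."""
    start = time.perf_counter()
    run(**config)
    return time.perf_counter() - start


def autotune(run, search_space, repeats=3):
    """Exhaustively time every configuration; return (best_config, best_seconds)."""
    keys = list(search_space)
    best_config, best_seconds = None, float("inf")
    for values in itertools.product(*(search_space[key] for key in keys)):
        config = dict(zip(keys, values))
        # Use the minimum over several runs to reduce timing noise.
        elapsed = min(time_once(run, config) for _ in range(repeats))
        if elapsed < best_seconds:
            best_config, best_seconds = config, elapsed
    return best_config, best_seconds


# Small integer-valued inputs keep the demo fast and the arithmetic exact.
n = 24
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i - j) for j in range(n)] for i in range(n)]

best_config, best_seconds = autotune(
    lambda tile: matmul_tiled(a, b, tile),
    {"tile": [4, 8, 12]},
)
print(f"fastest configuration: {best_config} ({best_seconds:.4f} s)")
```

An exhaustive grid search like this only scales to small search spaces. Real auto-tuners typically prune or sample the space, and the winning configuration depends on the machine the timing runs on.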

How It Works / Technology Behind It

VibeTensor is built on AI-driven code generation and optimization. A pipeline of coding agents analyzes the model architecture and the target hardware to generate custom runtime code. The process begins with a high-level representation of the model, which the agents decompose into low-level operations. These operations are then optimized iteratively, with different configurations tested at each step to find the best-performing one.
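The two stages described above, decomposing a high-level model into low-level operations and then optimizing them iteratively, can be sketched in a few lines. This is a generic compiler-style illustration under stated assumptions, not VibeTensor’s actual pipeline: the `Op` type, the `LOWERING` and `FUSION_RULES` tables, and the fused operation names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    """A low-level operation with symbolic arguments (hypothetical type)."""
    name: str
    args: tuple = ()


# Hypothetical lowering table: each high-level layer expands to primitive ops.
LOWERING = {
    "linear": lambda layer: [
        Op("matmul", (layer["weights"],)),
        Op("add", (layer["bias"],)),
    ],
    "relu": lambda layer: [Op("relu")],
}

# Hypothetical fusion rules: an adjacent pair of ops becomes one fused op.
FUSION_RULES = {
    ("add", "relu"): "add_relu",
    ("matmul", "add_relu"): "linear_relu",
}


def lower(model):
    """Decompose a list of high-level layers into a flat list of primitive ops."""
    ops = []
    for layer in model:
        ops.extend(LOWERING[layer["type"]](layer))
    return ops


def fuse_once(ops):
    """Apply the fusion rules in a single left-to-right pass."""
    fused, i = [], 0
    while i < len(ops):
        pair = (ops[i].name, ops[i + 1].name) if i + 1 < len(ops) else None
        if pair in FUSION_RULES:
            fused.append(Op(FUSION_RULES[pair], ops[i].args + ops[i + 1].args))
            i += 2
        else:
            fused.append(ops[i])
            i += 1
    return fused


def optimize(ops, max_iters=10):
    """Repeat the fusion pass until nothing changes or the iteration cap is hit."""
    for _ in range(max_iters):
        new_ops = fuse_once(ops)
        if new_ops == ops:
            break
        ops = new_ops
    return ops


model = [
    {"type": "linear", "weights": "W1", "bias": "b1"},
    {"type": "relu"},
    {"type": "linear", "weights": "W2", "bias": "b2"},
]

lowered = lower(model)
optimized = optimize(lowered)
print([op.name for op in lowered])    # ['matmul', 'add', 'relu', 'matmul', 'add']
print([op.name for op in optimized])  # ['linear_relu', 'matmul', 'add']
```

The first pass fuses `add` and `relu`, and only the second pass can fuse the result with the preceding `matmul`, which is why the optimization loops until it reaches a fixed point. A real system would also measure each candidate on the target hardware rather than apply fixed rules.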

Unlike traditional runtimes that rely on pre-compiled libraries, VibeTensor generates code tailored to each model and hardware target. The approach resembles how AutoML systems search over model architectures, except that VibeTensor applies the search to the runtime layer. It sits between model training and deployment, with the goal of a final product that is both fast and resource-efficient. The underlying AI agents are trained on a large dataset of optimization strategies, which is intended to let them handle diverse model types and hardware setups.

Use Cases and Practical Applications

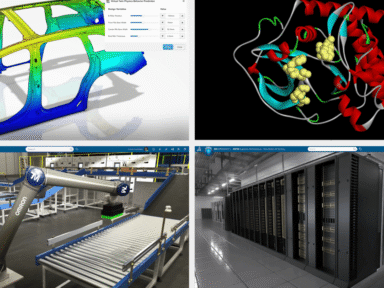

VibeTensor is well-suited to a variety of real-world scenarios. In autonomous driving, for instance, it can be used to deploy perception models with minimal latency, which supports timely decision-making. For cloud-based AI services, it helps maximize GPU utilization, reducing costs while maintaining high throughput. Another application is edge computing, where resource constraints are critical: VibeTensor can generate lightweight runtimes that fit within the memory and power limits of edge devices.

Consider a scenario where a company needs to deploy a custom computer vision model for quality control on a manufacturing line. Using VibeTensor, they can automate the runtime optimization, ensuring the model runs efficiently on their specific hardware without manual intervention. This not only speeds up deployment but also ensures consistent performance across different production environments.

Pricing and Plans

As of now, specific pricing details for VibeTensor are not publicly available. Since it is a release from NVIDIA, it is likely to be integrated into their existing ecosystem, such as the NVIDIA AI Enterprise suite or available through the NVIDIA GPU Cloud (NGC). Users should check the official NVIDIA website or contact their sales team for the most accurate pricing information. Given NVIDIA’s typical model, there may be a free tier for experimentation and enterprise plans for large-scale deployments.

Pros and Cons / Who Should Use It

**Pros:**

– **Automation:** Reduces the need for manual optimization, saving time and effort.

– **Performance:** Generates hardware-specific code that maximizes GPU efficiency.

– **Flexibility:** Supports multiple frameworks and can adapt to various hardware setups.

– **Scalability:** Suitable for both research prototyping and production deployment.

**Cons:**

– **Learning Curve:** While automated, users still need a basic understanding of deep learning and deployment workflows.

– **Hardware Dependency:** Primarily optimized for NVIDIA GPUs, which may limit users without access to such hardware.

– **Early Stage:** As a new release, it may lack the extensive community support of more established tools.

**Who Should Use It:**

– **AI Engineers and Researchers** looking to accelerate model deployment.

– **Organizations** deploying AI at scale, especially in production environments.

– **Developers** working with NVIDIA hardware who want to optimize inference performance.

Comparison with Alternatives

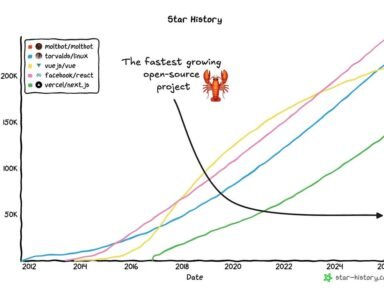

VibeTensor competes with other deep learning runtimes and optimization tools like TensorRT, ONNX Runtime, and Apache TVM. While TensorRT is also from NVIDIA and focuses on GPU optimization, VibeTensor distinguishes itself by using AI agents for code generation, potentially offering more adaptability. Compared to ONNX Runtime, which is framework-agnostic but requires manual tuning, VibeTensor automates the process. Apache TVM is a powerful open-source alternative but often requires significant expertise to use effectively. VibeTensor’s key advantage is its end-to-end automation, making it accessible to users who may not have deep expertise in low-level optimization.

Key Takeaways

– VibeTensor automates deep learning runtime generation using AI coding agents, reducing deployment time.

– It is designed for NVIDIA GPU users and targets performance gains through hardware-specific optimizations.

– Ideal for production environments where latency and resource efficiency are critical, such as autonomous driving and cloud services.

– Pricing is not yet publicly available; users should check NVIDIA’s official channels for details.

– Compared to alternatives like TensorRT and ONNX Runtime, VibeTensor provides greater automation but is tied to NVIDIA hardware.

FAQ

What is VibeTensor and how does it differ from traditional deep learning runtimes?

VibeTensor is an AI-generated deep learning runtime that uses coding agents to automatically create optimized code for model deployment. Unlike traditional runtimes that rely on static libraries, VibeTensor dynamically generates hardware-specific kernels, improving performance and reducing manual tuning efforts.

Is VibeTensor suitable for beginners in deep learning?

While VibeTensor automates many complex steps, users should have a basic understanding of deep learning models and deployment workflows. It is designed to reduce the learning curve for optimization but is most beneficial for those with some experience in AI development.

What hardware does VibeTensor support?

VibeTensor is optimized for NVIDIA GPUs, leveraging their architecture for maximum performance. Support for other hardware may be limited, so users without NVIDIA devices should check compatibility before adoption.

How does VibeTensor compare to TensorRT?

Both are NVIDIA products, but VibeTensor focuses on AI-driven code generation for runtime optimization, while TensorRT is a more established runtime with manual tuning options. VibeTensor offers greater automation, potentially making it easier for users who prefer a hands-off approach.

Are there any known limitations or challenges with VibeTensor?

As a new release, VibeTensor may have limited community support and documentation compared to mature tools. Additionally, its effectiveness is tied to NVIDIA hardware, which could be a barrier for some users. It is advisable to test it in a development environment before full deployment.

What kind of support and documentation is available for VibeTensor?

NVIDIA typically provides comprehensive documentation, tutorials, and support through their developer portals and enterprise channels. Users can expect resources to help with integration and troubleshooting, but the depth may vary as the tool is new.

Can VibeTensor be integrated with existing AI workflows?

Yes, VibeTensor is designed to work with popular frameworks like PyTorch and TensorFlow, allowing integration into existing pipelines. It aims to complement rather than replace current tools, enhancing deployment efficiency.

What is the pricing model for VibeTensor?

Pricing details have not been officially released. It is likely part of NVIDIA’s enterprise offerings or available through cloud partnerships. Users should refer to NVIDIA’s website for the latest information on costs and licensing.

What are the best alternatives to VibeTensor?

Alternatives include TensorRT (for NVIDIA GPUs), ONNX Runtime (framework-agnostic), and Apache TVM (open-source optimization). The choice depends on your hardware, framework, and need for automation versus control.

How can I get started with VibeTensor?

To start, visit the official NVIDIA website or NGC for resources and downloads. You may need an NVIDIA GPU and appropriate drivers. NVIDIA often provides sample projects and documentation to guide initial setup and experimentation.