🎯 KEY TAKEAWAY

If you take only a few things from this, make it these.

- Google and OpenAI accuse other companies of using “distillation” to clone their models cheaply

- Distillation transfers knowledge from large AI models to smaller, cheaper ones, potentially undermining original developers’ competitive edge

- The practice raises concerns about intellectual property, model security, and revenue loss for leading AI companies

- Industry leaders are now calling for clearer rules and technical safeguards to protect proprietary AI research

Google and OpenAI Raise Alarm Over Distillation Attacks

Google and OpenAI have both raised concerns about “distillation attacks,” a technique where companies copy the capabilities of their advanced AI models at a fraction of the cost. According to reports from The Decoder, both tech giants warn that this practice threatens their business models and intellectual property rights. Distillation allows smaller models to mimic the behavior of larger, more expensive ones by training on outputs generated by the original systems.

The issue has become particularly sensitive given the close ties between major AI players. Microsoft, a key OpenAI partner, has faced scrutiny over whether its own AI efforts may benefit from distillation techniques. The practice is not clearly illegal, but it is ethically controversial because it can undercut the market for premium AI services by enabling cheaper knockoffs. Google and OpenAI argue that without proper safeguards, the economic incentives for frontier AI research could disappear.

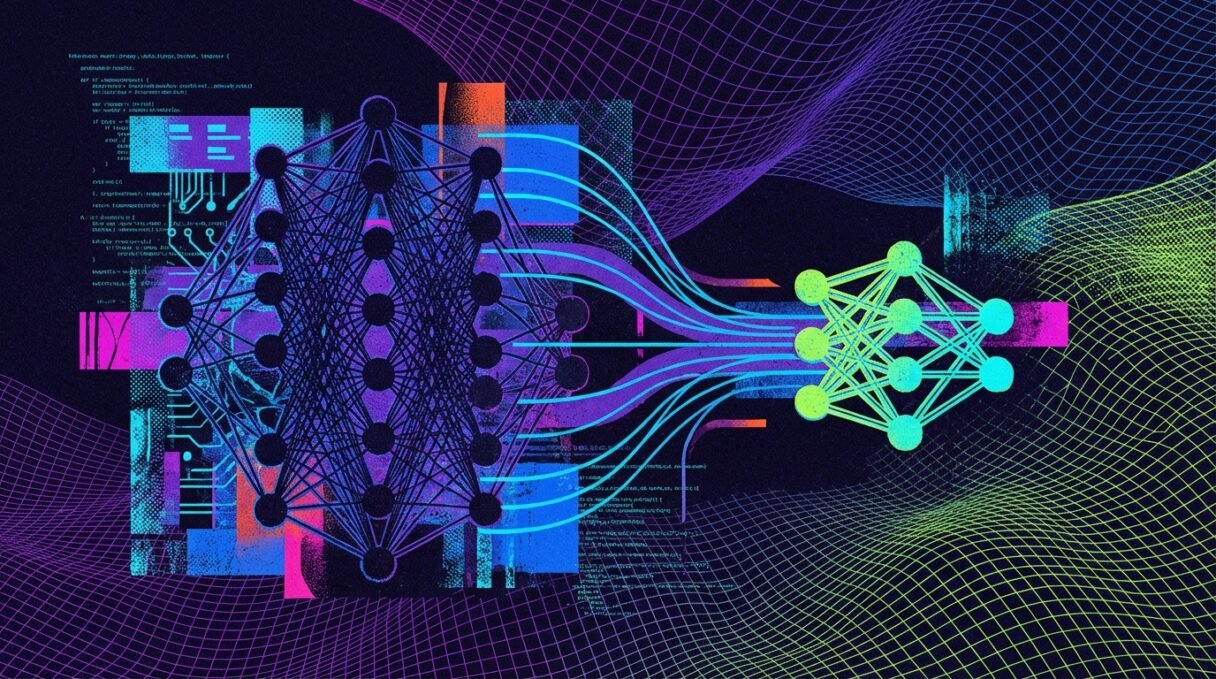

What Is Model Distillation?

Model distillation is a machine learning technique that transfers knowledge from a large, powerful model to a smaller, more efficient one.

How it works:

- A large “teacher” model generates outputs (text, predictions, classifications)

- A smaller “student” model trains on these outputs instead of raw data

- The student learns to approximate the teacher’s behavior with far fewer resources

- This can reduce inference costs substantially, by up to 90% in some cases, compared to running the full model
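The teacher-student loop described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: `teacher_predict` stands in for a large model that can only be queried, the student is a tiny linear softmax classifier, and the names and hyperparameters are hypothetical. Real distillation of language models works on generated text at far larger scale, but the core idea is the same: the student is fitted to the teacher's outputs rather than to original training data.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def teacher_predict(x):
    """Hypothetical 'teacher': a fixed 2-class model the student can only query."""
    return softmax([2.0 * x[0] - 1.0 * x[1], -1.5 * x[0] + 0.5 * x[1]])


def student_predict(weights, x):
    """Linear softmax student: one weight row per class."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in weights])


def train_student(inputs, teacher_probs, epochs=200, lr=0.5):
    """Fit the student to the teacher's output distributions (soft labels)."""
    n_classes = len(teacher_probs[0])
    n_features = len(inputs[0])
    weights = [[0.0] * n_features for _ in range(n_classes)]
    for _ in range(epochs):
        for x, target in zip(inputs, teacher_probs):
            probs = student_predict(weights, x)
            for k in range(n_classes):
                # Gradient of cross-entropy with respect to logit k.
                grad = probs[k] - target[k]
                for j in range(n_features):
                    weights[k][j] -= lr * grad * x[j]
    return weights


def agreement(weights, points):
    """Fraction of points where student and teacher pick the same class."""
    same = 0
    for x in points:
        t = teacher_predict(x)
        s = student_predict(weights, x)
        same += t.index(max(t)) == s.index(max(s))
    return same / len(points)


random.seed(0)
# Step 1: query the teacher and record its outputs.
queries = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(200)]
labels = [teacher_predict(x) for x in queries]
# Step 2: train the student on those outputs only.
student = train_student(queries, labels)
# Step 3: check how closely the student mimics the teacher on unseen inputs.
held_out = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(100)]
print(f"agreement on held-out queries: {agreement(student, held_out):.2f}")
```

Note that the student never sees the teacher's weights or training data, only its responses to queries. That is why API access alone can be enough for this kind of copying.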

Why it matters to AI companies:

- Revenue impact: Customers could use distilled models instead of paying for API access to the original

- Competitive threat: Rivals can quickly replicate capabilities without the massive R&D investment

- Security risks: Distillation may expose model vulnerabilities and training methodologies

- IP concerns: No clear legal framework exists for protecting model “style” or behavior

Industry Response and Next Steps

Both Google and OpenAI are exploring technical and policy solutions to address distillation risks:

Potential countermeasures:

- Output watermarking: Embedding detectable patterns in AI responses to track unauthorized training

- API monitoring: Detecting unusual usage patterns that suggest large-scale distillation attempts

- Rate limiting: Restricting the volume of outputs any single user can generate

- Legal frameworks: Developing new IP protections for AI model outputs and behaviors
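Two of the countermeasures above, rate limiting and API monitoring, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of how Google or OpenAI implement them: the class `SlidingWindowLimiter`, the function `flag_heavy_users`, and the threshold values are all hypothetical.

```python
from collections import defaultdict, deque
from statistics import median


class SlidingWindowLimiter:
    """Allow at most `max_requests` per `window_seconds` for each API key."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # api_key -> timestamps of recently accepted requests
        self._history = defaultdict(deque)

    def allow(self, api_key, now):
        timestamps = self._history[api_key]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


def flag_heavy_users(request_counts, ratio=10.0):
    """Return API keys whose volume exceeds `ratio` times the median volume."""
    if not request_counts:
        return []
    baseline = max(median(request_counts.values()), 1)
    return sorted(k for k, c in request_counts.items() if c > ratio * baseline)


# Rate limiting: the fourth request inside the same window is rejected.
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
decisions = [limiter.allow("key-a", now) for now in (0, 1, 2, 3)]
print(decisions)  # [True, True, True, False]

# Monitoring: one key generates far more output than its peers.
daily_counts = {"key-a": 120, "key-b": 95, "key-c": 50000, "key-d": 110}
print(flag_heavy_users(daily_counts))  # ['key-c']
```

A volume threshold like this is a blunt signal. It would also flag legitimate heavy users, and it can be evaded by spreading queries across many accounts, so in practice it would only be a first filter before closer review.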

Broader implications:

- Innovation slowdown: If distillation becomes widespread, companies may reduce investment in frontier models

- Open vs. closed debate: The controversy fuels arguments for and against open-source AI development

- Regulatory attention: Policymakers may need to address AI IP rights in upcoming technology regulations

Conclusion

Google and OpenAI’s concerns about distillation attacks highlight a growing tension in the AI industry between innovation, competition, and intellectual property protection. As the technology matures, the question of who owns and controls AI model capabilities becomes increasingly critical for the business models driving the field forward.

The industry must balance protecting investments in cutting-edge AI research with fostering healthy competition and accessibility. Technical safeguards, legal frameworks, and industry standards will likely emerge as key battlegrounds in the coming months. Companies developing AI should monitor this space closely and consider how their model access policies might be exploited or protected.

FAQ

What is a distillation attack in AI?

A distillation attack occurs when a company uses a large AI model’s outputs to train a smaller, cheaper model that mimics the original’s capabilities. This bypasses the need for expensive research and development while potentially undercutting the original model’s market value.

Why are Google and OpenAI concerned about this practice?

Both companies invest billions in developing cutting-edge AI models. Distillation allows competitors to clone their capabilities at minimal cost, threatening their revenue models and reducing incentives for frontier research. It also raises intellectual property and security concerns.

Is model distillation illegal?

Currently, distillation exists in a legal gray area. While using publicly available AI outputs for training isn’t explicitly prohibited, companies argue it violates terms of service and undermines the economic foundation of AI development. No clear legal precedent exists yet.

How can companies prevent distillation?

Potential measures include output watermarking, API monitoring for suspicious usage patterns, rate limiting, and implementing stricter terms of service. However, these technical solutions may be difficult to enforce and could impact legitimate users.

Which companies are most affected by this issue?

Leading AI labs like Google, OpenAI, and Anthropic face the greatest risks since they invest heavily in frontier models. However, the practice affects the entire ecosystem, including startups and open-source developers who rely on proprietary models for research.

What happens next in this debate?

The industry is likely to see increased calls for regulatory clarity on AI IP rights, development of technical countermeasures, and potential legal challenges. Companies may also revise their terms of service and API policies to explicitly prohibit distillation attempts.
