🎯 KEY TAKEAWAY

If you remember only a few points from this article, make them these.

- This upgrade makes OpenAI a stronger contender for building complex, stateful AI agents that can perform multi-step tasks.

- The key benefits are persistent memory, advanced tool use, and improved reliability, which are crucial for long-running applications.

- Ideal for developers creating sophisticated customer support bots, personal assistants, and enterprise automation tools.

- While powerful, developers must be mindful of the potential for high token costs on long-running tasks.

- Compared to alternatives, it offers tight integration with the OpenAI ecosystem, which can be a major advantage for existing users.

Introduction

OpenAI has upgraded its Responses API with features specifically built for long-running AI agents. This update addresses a critical challenge in AI development: maintaining state, context, and reliability over extended interactions without complex external infrastructure. It’s designed for developers, startups, and enterprises building persistent AI agents that need to operate reliably over time. The key benefits are enhanced memory capabilities, improved tool integration, and more robust error handling, allowing for the creation of sophisticated, stateful AI applications.

Key Features and Capabilities

The upgrade focuses on three areas: persistent memory, advanced tool use, and improved reliability. Persistent memory lets an agent recall previous conversations and user data, which makes for a more personalized and coherent experience. This is a significant step up from stateless API calls, where each request has to carry all of its own context.

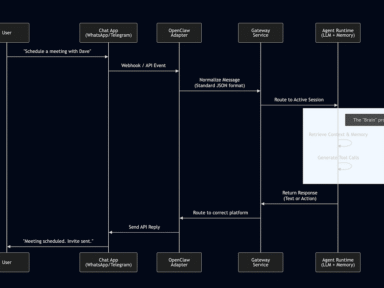

Advanced tool use is another cornerstone. The API now better supports agents that call multiple tools in sequence, make decisions based on each output, and correct course along the way. For example, an agent could book a flight, then use that confirmation to book a hotel, and finally add both to a calendar, all within a single, long-running process. This is crucial for complex, multi-step automation. Reliability features include improved timeout handling and retry logic, which are essential for agents that may run for minutes or even hours.
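To make the retry idea concrete, here is a minimal client-side sketch of retrying a flaky tool call with exponential backoff. It is not the API's built-in behavior: `TransientToolError`, `call_with_retry`, and `make_flaky_booking` are hypothetical names invented for this illustration, and the booking tool is a stand-in that fails a fixed number of times before succeeding.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientToolError(Exception):
    """Hypothetical error type for failures worth retrying (e.g. a timeout)."""


def call_with_retry(
    tool: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `tool`, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return tool()
        except TransientToolError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Delay doubles each time: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** (attempt - 1))


def make_flaky_booking(failures: int) -> Callable[[], str]:
    """Build a stand-in booking tool that fails `failures` times, then succeeds."""
    state = {"calls": 0}

    def book_flight() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise TransientToolError("upstream timeout")
        return "FLIGHT-CONFIRMED"

    return book_flight
```

Passing `sleep` as a parameter keeps the helper easy to test: a test can record the requested delays instead of actually waiting.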

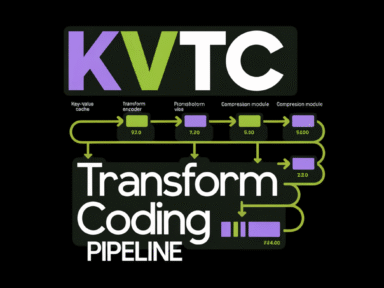

How It Works / Technology Behind It

The technology builds on the foundation of the Chat Completions API but adds a stateful layer. Instead of treating each API call as an independent event, the Responses API can maintain a “session” or “conversation” context. Developers pass an identifier that links a new request to earlier state (for example, a conversation ID or the ID of a previous response), and OpenAI’s infrastructure manages the context, including tool call history and user-specific data.
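The linking idea can be sketched locally without calling any service. The example below is an in-memory stand-in, not OpenAI's implementation: `InMemoryResponseStore` and `StoredResponse` are hypothetical names, and the "model" is a stub that only reports how many earlier turns it could see. The parameter name `previous_response_id` mirrors the argument the OpenAI Python SDK accepts on `client.responses.create`; check the official reference for current behavior before relying on it.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class StoredResponse:
    response_id: str
    previous_id: Optional[str]
    user_input: str
    output: str


@dataclass
class InMemoryResponseStore:
    """Hypothetical stand-in for server-side state; not OpenAI's implementation."""

    responses: Dict[str, StoredResponse] = field(default_factory=dict)

    def history(self, response_id: Optional[str]) -> List[StoredResponse]:
        """Follow the chain of previous IDs back to the first turn, oldest first."""
        chain: List[StoredResponse] = []
        while response_id is not None:
            stored = self.responses[response_id]
            chain.append(stored)
            response_id = stored.previous_id
        return list(reversed(chain))

    def create(
        self, user_input: str, previous_response_id: Optional[str] = None
    ) -> StoredResponse:
        """Store a new turn, linked to an earlier one if an ID is given."""
        context = self.history(previous_response_id)
        # A real model would generate text here; this stub reports its context size.
        output = f"(reply with {len(context)} earlier turn(s) in context)"
        stored = StoredResponse(
            response_id=str(uuid.uuid4()),
            previous_id=previous_response_id,
            user_input=user_input,
            output=output,
        )
        self.responses[stored.response_id] = stored
        return stored


store = InMemoryResponseStore()
first = store.create("My budget is $2,000.")
# The caller sends only the new message plus an ID; the store supplies the rest.
second = store.create("Find me a hotel.", previous_response_id=first.response_id)
```

The point of the design is that the caller never resends the transcript. It holds one identifier, and the service reconstructs the context from it.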

This is achieved by structuring the API to handle a sequence of requests and responses that are logically linked. The model is given access to a history of tool calls and their results, allowing it to reason about the next step in a complex workflow. Alternatives such as Anthropic’s Claude models also support long-running tasks, but may require different implementation patterns. The key advantage of OpenAI’s offering is its tight integration with the company’s own models, such as GPT-4o and o1, which potentially offers a more seamless experience for developers already invested in the platform.
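The loop below is a small sketch of deciding the next step from a tool-call history, using the flight, hotel, and calendar example from earlier in the article. Everything in it is illustrative: the three tools are local stubs, the destination "Lisbon" is made up, and `choose_next_tool` is a hard-coded stand-in for the decision a model would make.

```python
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical tools; each takes one string argument and returns a string result.
def book_flight(destination: str) -> str:
    return f"flight to {destination} confirmed"


def book_hotel(flight_confirmation: str) -> str:
    return f"hotel booked for trip ({flight_confirmation})"


def add_to_calendar(details: str) -> str:
    return f"calendar entry created: {details}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "book_flight": book_flight,
    "book_hotel": book_hotel,
    "add_to_calendar": add_to_calendar,
}


def choose_next_tool(history: List[dict]) -> Optional[Tuple[str, str]]:
    """Stub for the model's decision: pick the next tool from the history so far."""
    done = [step["tool"] for step in history]
    if "book_flight" not in done:
        return ("book_flight", "Lisbon")
    if "book_hotel" not in done:
        # Feed the previous result (the flight confirmation) into the next tool.
        return ("book_hotel", history[-1]["result"])
    if "add_to_calendar" not in done:
        return ("add_to_calendar", history[-1]["result"])
    return None  # nothing left to do


def run_agent(max_steps: int = 10) -> List[dict]:
    """Run tools until the chooser says stop or the step limit is reached."""
    history: List[dict] = []
    for _ in range(max_steps):
        decision = choose_next_tool(history)
        if decision is None:
            break
        name, argument = decision
        result = TOOLS[name](argument)
        # Each call and its result is appended, so later decisions can use it.
        history.append({"tool": name, "argument": argument, "result": result})
    return history
```

The `max_steps` cap is a deliberate safeguard: a long-running agent that never reaches a stopping decision would otherwise loop, and spend tokens, indefinitely.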

Use Cases and Practical Applications

The practical applications for these upgraded capabilities are extensive. For customer support, an agent can now handle an entire user journey, from initial problem identification to resolution and follow-up, remembering details from the start to the end of the interaction. This is a major improvement over simple, one-shot chatbots.

As personal assistants, these agents can manage complex projects. Imagine an agent that helps plan a vacation by researching destinations, comparing prices, booking reservations, and creating a detailed itinerary, all while remembering the user’s preferences for budget and activities. For enterprise automation, this could mean an agent that monitors business metrics, identifies anomalies, initiates corrective actions through various APIs, and reports on the outcome. These use cases move beyond simple Q&A to true task completion and workflow automation.

Pricing and Plans

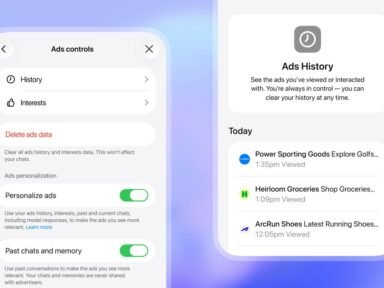

Pricing for the Responses API is based on token usage, as with other OpenAI APIs. The cost depends on the specific model used (e.g., GPT-4o, o1), the number of input and output tokens, and the complexity of the task, including any tool calls. There are no separate fees for the stateful features themselves; they are part of the API’s functionality.

For current, detailed pricing, check the official OpenAI pricing page. Enterprise customers may have access to custom pricing plans and dedicated support. Note that long-running agents can consume a large number of tokens, so costs can accumulate quickly for complex or frequent tasks.
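A rough back-of-the-envelope estimator shows why costs climb on long sessions. It rests on two assumptions that are not taken from OpenAI’s price list: the whole accumulated context is billed as input on every turn, and the per-million-token prices passed in are placeholders. `estimate_session_cost` is a hypothetical helper written for this sketch.

```python
def estimate_session_cost(
    turns: int,
    input_tokens_per_turn: int,
    output_tokens_per_turn: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> dict:
    """Estimate tokens and cost for a session whose context grows every turn.

    Assumption: each turn is billed for the full accumulated context as input.
    Prices are placeholders supplied by the caller, not real OpenAI prices.
    """
    total_input = 0
    total_output = 0
    context = 0
    for _ in range(turns):
        context += input_tokens_per_turn    # new user or tool input joins the context
        total_input += context              # the whole context is billed as input
        context += output_tokens_per_turn   # the reply becomes context for later turns
        total_output += output_tokens_per_turn
    cost = (
        total_input / 1_000_000 * input_price_per_million
        + total_output / 1_000_000 * output_price_per_million
    )
    return {
        "input_tokens": total_input,
        "output_tokens": total_output,
        "cost": cost,
    }
```

Under these assumptions, billed input grows roughly with the square of the number of turns, because every earlier turn is counted again each time. That is why trimming or summarizing history matters more as sessions get longer.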

Pros and Cons / Who Should Use It

Pros:

- Stateful Operations: Native support for long-running, context-aware agents reduces the need for custom state-management infrastructure.

- Seamless Tool Integration: Simplifies building complex, multi-step workflows.

- Robustness: Improved reliability features are essential for production-grade agents.

- Ecosystem Integration: Works well with other OpenAI services and models.

Cons:

- Cost: Long-running tasks can become expensive due to token consumption.

FAQ

What is the main difference between the Responses API and the standard Chat Completions API?

The standard Chat Completions API is stateless, meaning each request is treated as an isolated event. The Responses API, with these new features, is designed to be stateful, maintaining conversation context, tool call history, and user data over long-running sessions. This is essential for building agents that can complete complex, multi-step tasks.

Is this suitable for building a simple chatbot?

Yes, but it might be more power than you need for a simple, one-off Q&A bot. The stateful features are most beneficial for applications that require memory and sequential actions. For a basic chatbot, the standard Chat Completions API might be more cost-effective and simpler to implement.

How does the pricing work for long-running agents?

Pricing is based on token usage: input and output tokens, including those consumed by tool calls. The longer and more complex the agent’s task, the more tokens it will consume, leading to higher costs. It’s crucial to monitor token usage and optimize your agent’s prompts and workflows to manage expenses.
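One simple way to monitor usage is a running budget that stops the agent once a limit is crossed. This is a minimal sketch: `TokenBudget` and `TokenBudgetExceeded` are hypothetical names, and the token counts are assumed to come from whatever usage figures your API client reports for each call.

```python
class TokenBudgetExceeded(RuntimeError):
    """Raised when recorded usage goes past the configured limit."""


class TokenBudget:
    """Track cumulative token usage for one agent run against a fixed limit."""

    def __init__(self, limit: int) -> None:
        if limit <= 0:
            raise ValueError("limit must be positive")
        self.limit = limit
        self.used = 0

    def record(self, input_tokens: int, output_tokens: int) -> int:
        """Add one call's usage and return the tokens remaining.

        Raises TokenBudgetExceeded if the running total passes the limit.
        """
        self.used += input_tokens + output_tokens
        if self.used > self.limit:
            raise TokenBudgetExceeded(
                f"used {self.used} tokens, limit is {self.limit}"
            )
        return self.limit - self.used
```

Calling `record` after every model response gives the agent loop a natural place to stop, summarize its history, or ask the user before continuing.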

Are there good alternatives to OpenAI for building long-running agents?

Yes, Anthropic’s Claude models are a strong alternative, known for their large context windows and reasoning capabilities. Frameworks such as LangChain and custom-built solutions using open-source models are also options. The best choice depends on your specific needs regarding cost, performance, and ecosystem integration.

Do I need a high level of technical expertise to use this API?

While the API simplifies the infrastructure for stateful agents, you still need a solid understanding of API integration, prompt engineering, and potentially software development (e.g., Python or Node.js) to build effective applications. The learning curve is moderate to steep, depending on the complexity of your agent.
