🎯 KEY TAKEAWAY

If you only take one thing from this, make it these.

Hide

- New research reveals a fundamental conflict between AI agent security and usefulness, forcing a trade-off

- The more autonomy and tools an agent has, the harder it becomes to prevent misuse or jailbreaks

- This tension affects developers, enterprises, and anyone building or deploying AI agents

- Future solutions may require new security paradigms rather than just better model training

- The finding challenges the assumption that more capable agents are always better

AI Agents Face Security and Usefulness Trade-Off

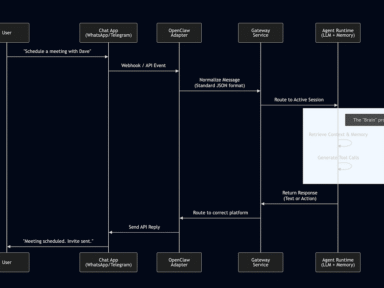

A new study reveals that AI agents face an uncomfortable truth where security and usefulness are in direct competition. The research, reported by The Decoder, shows that as agents become more capable and autonomous, preventing misuse becomes increasingly difficult. This creates a fundamental tension for developers trying to build both powerful and safe AI systems.

The core issue lies in the agent’s architecture. More useful agents need access to tools, data, and decision-making power. But each additional capability creates a new potential attack surface for malicious actors. This makes the security challenge exponentially harder as agents become more capable.

The Security-Usefulness Paradox

The research identifies several key factors driving this conflict:

Why more capabilities create more risk:

- Tool access: Agents connected to external APIs or systems can be manipulated to perform unauthorized actions

- Autonomy: Greater decision-making freedom makes it harder to predict and control agent behavior

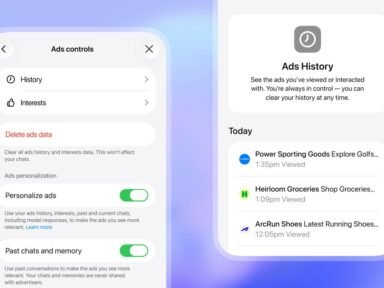

- Memory and context: Agents that remember past interactions can be tricked into revealing sensitive information

- Multi-step reasoning: Complex reasoning chains are harder to audit and verify for safety

The jailbreak problem:

- Prompt injection: Attackers can hide malicious instructions in seemingly harmless inputs

- Chain-of-thought exploits: Complex reasoning processes can be hijacked to reach unsafe conclusions

- Tool misuse: Even benign tools can be combined in harmful ways that are difficult to anticipate

Impact on AI Development

This tension has immediate consequences for how AI agents are built and deployed:

For developers:

- Security overhead: Every new capability requires extensive safety testing and monitoring

- Development complexity: Building secure agents requires expertise in both AI and cybersecurity

- Testing challenges: It’s nearly impossible to anticipate every possible misuse scenario

For enterprises:

- Risk assessment: Companies must weigh productivity gains against potential security breaches

- Deployment decisions: Some useful agent capabilities may be too risky to implement

- Compliance concerns: Regulators are increasingly scrutinizing AI agent security

For the industry:

- Innovation slowdown: Security concerns may delay the release of more advanced agents

- Market differentiation: Companies that solve this problem could gain significant competitive advantage

- Research focus: Academic and industry labs are prioritizing security research

Current Approaches and Limitations

Current security methods struggle with this fundamental trade-off:

Traditional security measures:

- Content filtering: Can block obvious harmful requests but misses sophisticated attacks

- Access controls: Limit what agents can do but reduce their usefulness

- Monitoring and auditing: Help detect misuse but can’t prevent it in real-time

- Sandboxing: Isolates agents but limits their ability to interact with real systems

Why these methods fall short:

- Adversarial evolution: Attackers continuously develop new bypass techniques

- Complexity barrier: Security measures that work for simple agents fail at scale

- False positives: Overly restrictive security can break legitimate agent functionality

Future Directions and Solutions

Researchers are exploring new approaches to this problem:

Emerging security paradigms:

- Formal verification: Mathematical proof that agents will behave safely under all conditions

- Adversarial training: Exposing agents to attack scenarios during development

- Human-in-the-loop: Keeping humans involved in critical decision points

- Capability limitation: Designing agents with inherent safety constraints

Industry responses:

- Security-first design: Building safety into agent architecture from the ground up

- Red teaming: Dedicated teams try to break agents before release

- Transparency initiatives: Sharing security research and best practices

- Collaborative standards: Industry groups developing common security frameworks

Research priorities:

- Interpretable AI: Understanding why agents make certain decisions

- Robustness testing: Ensuring agents behave safely under unexpected conditions

- Scalable security: Developing methods that work as agents become more complex

Conclusion

The research confirms that AI agent security and usefulness exist in direct tension, creating a fundamental challenge for the field. As agents become more capable, preventing misuse becomes exponentially harder, forcing developers to make difficult trade-offs between functionality and safety.

This finding suggests that future progress in AI agents will require entirely new security approaches rather than incremental improvements to existing methods. The companies and researchers who solve this problem will likely define the next generation of AI systems, while those who ignore it may face serious security failures. The industry must prioritize security innovation alongside capability development to realize the full potential of AI agents.

FAQ

What is the core trade-off between AI agent security and usefulness?

The core trade-off is that more capable AI agents require more autonomy, tools, and decision-making power, which simultaneously creates more opportunities for misuse and attack. As agents become more useful, they also become harder to secure against jailbreaks, prompt injections, and other adversarial techniques.

Why can’t we just train agents to be both secure and useful?

Training alone is insufficient because adversarial actors continuously develop new attack methods. The complexity of modern AI systems makes it nearly impossible to anticipate every potential misuse scenario during training. Security requires ongoing monitoring, testing, and architectural safeguards beyond just model training.

What types of agents are most affected by this trade-off?

Agents with access to external tools, APIs, or sensitive data face the greatest security challenges. Multi-step reasoning agents, those with memory capabilities, and agents that can make autonomous decisions are particularly vulnerable. The more an agent can do, the more security measures are needed.

Are there any promising solutions being developed?

Researchers are exploring formal verification methods, adversarial training techniques, and human-in-the-loop systems. Some companies are adopting security-first design principles and intensive red teaming before release. However, scalable solutions that work for highly capable agents remain an active research area.

How does this affect enterprises deploying AI agents?

Enterprises must carefully weigh the productivity benefits of powerful agents against potential security risks and compliance concerns. Some useful capabilities may need to be limited or delayed until adequate security measures are developed. This adds complexity and cost to AI deployment projects.

What should developers consider when building AI agents?

Developers should prioritize security from the initial design phase rather than treating it as an afterthought. This includes implementing proper access controls, monitoring systems, and testing for adversarial scenarios. They should also consider the principle of least privilege and design agents with inherent safety constraints.

How would you rate AI Agents Grapple with Security-Usefulness Tradeoffs?