🎯 KEY TAKEAWAY

If you take only one thing from this article, make it these points.


- ByteDance’s Jimeng AI is a powerful text-to-video model capable of generating 10-second, 1080p clips with high visual fidelity and temporal consistency.

- It is designed to lower the barrier to video production, making it ideal for social media marketers, content creators, and creative professionals for tasks like ad creation, storyboarding, and explainer videos.

- The tool features a user-friendly interface and leverages advanced diffusion technology, but access is currently limited and prompt engineering is key to achieving optimal results.

- Pricing for a global release has not been finalized, but Jimeng AI is expected to use a credit-based system, which could make it competitively priced against alternatives like Runway, Luma AI, and Pika.

- Its main strengths lie in its ease of use and high-quality output, but limitations include short clip duration and regional availability, making it a strong contender but not a complete replacement for all video production needs.

The landscape of AI video generation has been rapidly evolving, with new models challenging the dominance of established players. Recently, ByteDance, the company behind TikTok and CapCut, released Jimeng AI, a text-to-video model that has garnered attention for its ability to generate high-quality, coherent clips from simple text prompts. This tool addresses a significant problem for creators: the high cost and technical barrier of traditional video production. It is designed for social media managers, content creators, and marketing professionals who need to produce engaging visual content quickly and efficiently. By leveraging ByteDance’s vast data and computational resources, Jimeng AI promises a blend of accessibility and high-fidelity output, making professional-grade video creation more attainable for a broader audience.

Key Features and Capabilities

Jimeng AI stands out for its technical specifications. The model can generate videos up to 10 seconds long at 1080p resolution. That is competitive at a time when many tools struggle to keep clips consistent over longer durations. A key feature is its detailed prompt understanding, which allows it to interpret complex prompts involving specific actions, camera movements, and stylistic elements. For instance, a prompt like “A cinematic shot of a glass of water on a wooden table, with morning sunlight filtering through a window, slow-motion ripple on the surface” can result in a visually stunning and physically plausible clip. The model is also multi-modal: it supports image-to-video generation, where a static image is animated according to a text description, adding another layer of creative control. This capability is particularly useful for turning illustrations or product photos into dynamic marketing assets.
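Since prompt wording drives results, it can help to keep the elements of a shot (framing, subject, setting, lighting, motion) separate and assemble them into a prompt string only at the end. The sketch below does this in Python and rebuilds the example prompt above. It is only a workflow aid. `ShotPrompt`, its field names, and `render` are hypothetical and are not part of any Jimeng AI or ByteDance API. Jimeng AI accepts plain text, so the output is simply pasted into the prompt box.

```python
from dataclasses import dataclass, field, replace


@dataclass
class ShotPrompt:
    """Hypothetical helper for assembling a text-to-video prompt from parts.
    The field names are our own convention, not part of any Jimeng AI API."""
    shot: str                    # framing or style, e.g. "A cinematic shot"
    subject: str                 # what is on screen
    setting: str = ""            # where the subject is
    lighting: str = ""           # light source and mood
    motion: list[str] = field(default_factory=list)  # actions and camera moves

    def render(self) -> str:
        # Core clause: "<shot> of <subject> <setting>"
        core = f"{self.shot} of {self.subject}"
        if self.setting:
            core += f" {self.setting}"
        # Remaining elements are appended as comma-separated phrases.
        parts = [core]
        if self.lighting:
            parts.append(self.lighting)
        parts.extend(self.motion)
        return ", ".join(parts)


if __name__ == "__main__":
    base = ShotPrompt(
        shot="A cinematic shot",
        subject="a glass of water",
        setting="on a wooden table",
        lighting="with morning sunlight filtering through a window",
        motion=["slow-motion ripple on the surface"],
    )
    print(base.render())
    # Vary one element at a time when iterating on results.
    print(replace(base, lighting="lit by warm candlelight").render())
```

Changing a single field with `dataclasses.replace` and regenerating makes it easier to tell which part of the prompt caused a change in the output.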

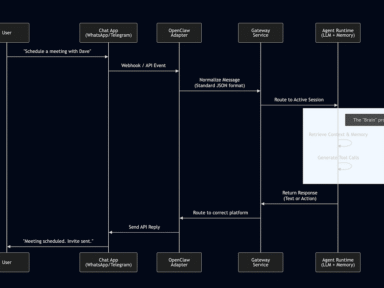

How It Works / Technology Behind It

At its core, Jimeng AI is understood to use a diffusion model architecture, the same family of techniques behind tools like Sora and Runway Gen-3, with a reported emphasis on efficiency and speed. ByteDance has drawn on its computational infrastructure to train the model on a large and diverse dataset of video content, which lets it learn complex physical dynamics and aesthetic styles. The technology focuses on temporal consistency, keeping objects and scenes coherent from one frame to the next, a common weakness of earlier AI video models. The exact architecture is proprietary. In typical text-to-video diffusion systems, a text encoder turns the prompt into embeddings. Those embeddings condition the model as it iteratively denoises a compressed (latent) representation of the whole clip. Generating all frames together, rather than strictly one at a time, helps keep the final result cohesive.
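The toy sketch below, in pure Python, shows deterministic (DDIM-style) diffusion sampling over a tiny "video latent" of a few frames. It is not Jimeng AI's code, and it makes no claim about ByteDance's actual design. Both `toy_text_encoder` and `oracle_noise_predictor` are hypothetical stand-ins. The first maps a prompt to a fixed clean latent. The second computes the exact noise from that known target, where a real model would use a trained network that estimates it. What the sketch does show is the shape of the loop: start from noise, and at each step update every frame of the clip at once.

```python
import math
import random

# Toy illustration only: Jimeng AI's real architecture is proprietary.
# A "video latent" here is a list of frames, each a short vector of floats.
Latent = list[list[float]]


def toy_text_encoder(prompt: str, frames: int, dim: int) -> Latent:
    """Hypothetical stand-in for text conditioning: deterministically maps
    a prompt to the clean latent the sampler should converge to."""
    rng = random.Random(prompt)  # string seeds are reproducible across runs
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(frames)]


def oracle_noise_predictor(x_t: Latent, target: Latent, alpha_bar: float) -> Latent:
    """Hypothetical noise predictor. A trained network would estimate the
    noise in x_t from the text embedding; this oracle computes it exactly."""
    s, n = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[(x - s * c) / n for x, c in zip(fx, fc)] for fx, fc in zip(x_t, target)]


def ddim_sample(prompt: str, frames: int = 4, dim: int = 3,
                steps: int = 10, seed: int = 0) -> tuple[Latent, Latent]:
    target = toy_text_encoder(prompt, frames, dim)
    rng = random.Random(seed)
    # Start from pure Gaussian noise covering ALL frames of the clip.
    x = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(frames)]
    # Cumulative signal level: alpha_bars[steps] is almost pure noise,
    # alpha_bars[0] == 1.0 is a fully clean latent.
    alpha_bars = [1.0 - 0.999 * t / steps for t in range(steps + 1)]
    for t in range(steps, 0, -1):
        ab_t, ab_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = oracle_noise_predictor(x, target, ab_t)
        # Deterministic DDIM update: estimate the clean latent, then re-noise
        # it to the next (lower) noise level. Every frame moves in the same step.
        x = [[math.sqrt(ab_prev) * (xi - math.sqrt(1.0 - ab_t) * ei) / math.sqrt(ab_t)
              + math.sqrt(1.0 - ab_prev) * ei
              for xi, ei in zip(fx, fe)]
             for fx, fe in zip(x, eps)]
    return x, target


if __name__ == "__main__":
    video, target = ddim_sample("slow-motion ripple on a glass of water")
    print(len(video), len(video[0]))  # 4 3
```

Because the oracle knows the answer, the sampler lands exactly on the target latent, which makes the loop easy to check. With a real, imperfect noise predictor, each step only approximates the clean clip, and a separate decoder would then turn the final latent into pixels.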

Use Cases and Practical Applications

The practical applications for Jimeng AI are extensive, particularly for digital content workflows. Social media marketers can use it to create eye-catching ads or reels without the need for a video shoot, turning a simple product description into a dynamic visual story. For instance, a fashion brand could generate a video of a model walking down a runway in a specific style, all from a text prompt. Educators and instructional designers can create animated explainer videos quickly, visualizing complex concepts that would otherwise require hours of animation work. Furthermore, independent filmmakers and storyboard artists can use it for pre-visualization, rapidly generating scene ideas to test camera angles and lighting before committing to a full production. This tool is ideal for anyone needing to prototype video concepts or produce short-form content at scale.

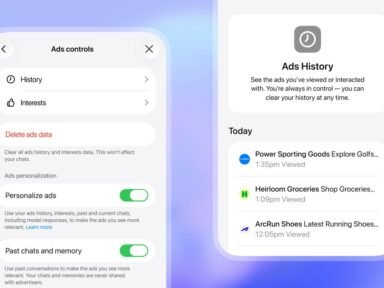

Pricing and Plans

As of its initial release, Jimeng AI is available to users in China, primarily through the “Dreamina” app, which is ByteDance’s existing AI image and video platform. Access for international users has been limited, but the model is expected to be integrated into ByteDance’s global creative suite, potentially including CapCut. Pricing details for a wider release are not yet fully public, but the model is likely to follow a credit-based system, similar to competitors like Luma AI or Pika. Users might receive a free daily allowance of credits to generate a few videos, with subscription tiers offering more generations per month. For professional use, enterprise-level pricing could offer API access for integration into larger marketing and content creation pipelines. The value proposition looks strongest for users already invested in the ByteDance ecosystem.

Pros and Cons / Who Should Use It

Pros:

- High-Quality Output: Generates visually appealing and coherent 1080p video clips.

- Ease of Use: Simple text-to-video interface lowers the barrier to entry.

- Fast Generation: Leverages efficient architecture for quicker processing times.

- Backed by ByteDance: Benefits from the company’s massive R&D and data resources.

Cons:

- Limited Access: Currently restricted in some regions, with a waitlist for international users.

- Short Clip Length: The 10-second limit may not suffice for longer-form content without editing.

- Learning Curve: Crafting prompts that yield desired results requires practice and experimentation.

- Potential for Bias: Like all AI models, it may reflect biases present in its training data.

Who Should Use It: Jimeng AI is best suited for social media content creators, digital marketers, and small business owners who need to produce high-quality video ads and posts quickly. It is also valuable for creative professionals like designers and filmmakers for brainstorming and storyboarding. Users who are already comfortable with the ByteDance ecosystem (TikTok, CapCut) will find it particularly easy to integrate into their workflow. Those requiring long-form, narrative-driven video production may need to wait for future updates or use it in conjunction with traditional editing software.

FAQ

What is Jimeng AI and how does it compare to Runway or Sora?

Jimeng AI is ByteDance’s text-to-video generation model, designed to create short, high-quality video clips from text prompts. Compared to Runway and Sora, it offers a competitive alternative with a focus on user accessibility and integration with the ByteDance ecosystem. While Sora is known for its longer, highly coherent sequences, Jimeng focuses on delivering high-fidelity 10-second clips, positioning it as a strong tool for social media and marketing content.

How much does Jimeng AI cost?

Official pricing for a global release is not yet available. In China, it is accessible via the Dreamina app, which likely uses a freemium or credit-based model. Users can expect a free tier with limited generations and paid subscriptions for more credits, similar to pricing structures from competitors like Luma AI and Pika. Keep an eye on official announcements from ByteDance for international pricing details.

Is Jimeng AI easy to use for beginners?

Yes, Jimeng AI is designed with a user-friendly, text-to-video interface that is accessible to beginners. The primary skill required is effective prompt engineering, which involves learning to describe scenes, actions, and styles clearly. While there is a learning curve to master prompt crafting, the tool does not require any video editing or technical expertise to start generating content.

What are the main limitations of Jimeng AI?

The main limitations include a 10-second clip length, which may require editing for longer narratives. Currently, access is primarily available in China, with a waitlist for other regions. Additionally, like all AI video tools, it can sometimes produce inconsistent results or artifacts, and users may need to generate multiple versions to get the desired output.
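Each generation is capped at 10 seconds, and some outputs get discarded, so a longer piece has to be planned as several clips plus extra takes. The sketch below estimates how many clips a target runtime needs, how much to trim from each, and how many generations to budget. It assumes every generation comes back at the full 10 seconds and that the excess is trimmed evenly in an editor such as CapCut. `plan_edit`, `EditPlan`, and the takes-per-clip figure are hypothetical planning aids, not part of Jimeng AI.

```python
import math
from dataclasses import dataclass


@dataclass
class EditPlan:
    clips: int               # separate generations to stitch together
    trim_per_clip: float     # seconds to cut from each clip in the editor
    total_generations: int   # clips * takes, since some outputs get discarded


def plan_edit(target_seconds: float, clip_seconds: float = 10.0,
              takes_per_clip: int = 1) -> EditPlan:
    """Hypothetical planner: assumes each generation returns a full
    clip_seconds clip and the overshoot is trimmed evenly across clips."""
    if target_seconds <= 0 or clip_seconds <= 0 or takes_per_clip < 1:
        raise ValueError("durations must be positive and takes_per_clip >= 1")
    clips = math.ceil(target_seconds / clip_seconds)
    overshoot = clips * clip_seconds - target_seconds
    return EditPlan(clips, overshoot / clips, clips * takes_per_clip)


if __name__ == "__main__":
    # A 45-second explainer, budgeting three attempts per shot.
    print(plan_edit(45, takes_per_clip=3))
    # EditPlan(clips=5, trim_per_clip=1.0, total_generations=15)
```

The `total_generations` figure is the one to check against a credit allowance once pricing is published, since discarded takes still consume generations.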

Are there good alternatives to Jimeng AI?

Yes, there are several strong alternatives. Runway Gen-3 is a leading professional tool with extensive features. Luma AI and Pika are also popular for their high-quality text-to-video generation. For users focused on social media, CapCut (also from ByteDance) offers robust video editing with some AI features. The best alternative depends on your specific needs, budget, and desired level of creative control.
