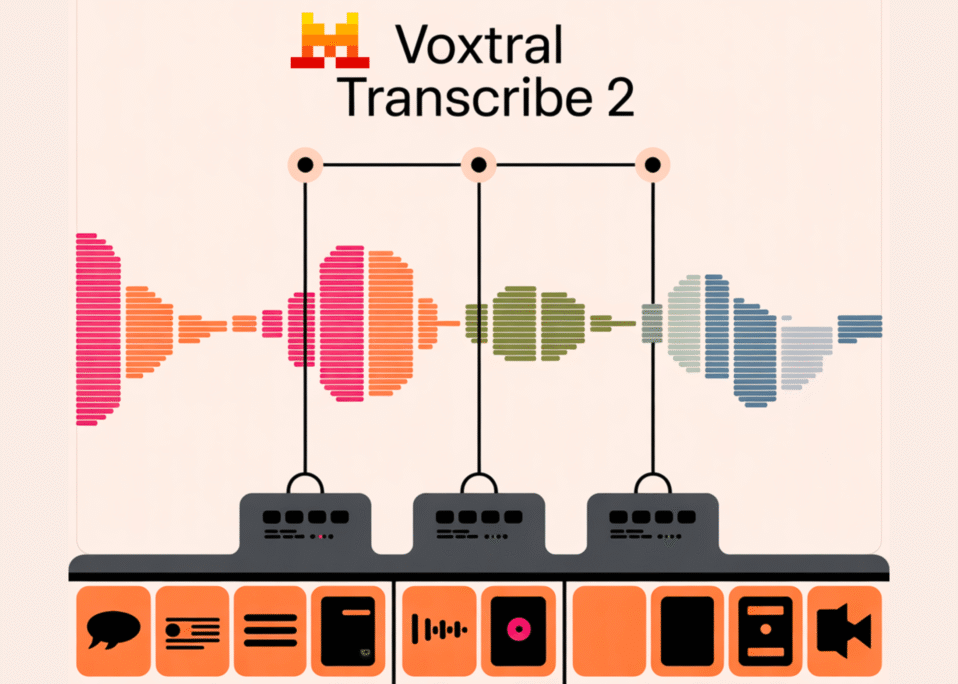

Mistral AI has officially entered the audio transcription and understanding race with the launch of **Voxtral Transcribe 2**, a model designed to bridge the gap between batch processing and real-time streaming. It addresses a critical pain point in the AI ecosystem: the split between high-accuracy batch transcription (ideal for archives) and the low-latency real-time automatic speech recognition (ASR) needed for live applications. By combining both capabilities with native multilingual support and speaker diarization, Voxtral targets developers, enterprises, and researchers who want to build sophisticated voice-enabled applications without managing a complex pipeline of disparate tools.

Key Features and Capabilities

Voxtral Transcribe 2 distinguishes itself with a feature set that emphasizes practical utility rather than benchmark scores alone. Because it builds on Mistral's language-model work, it is positioned to handle not just speech-to-text but also semantic understanding of the audio content.

**Core Capabilities:**

* **Batch and Real-Time Modes:** Unlike many competitors that force a choice between the two, Voxtral supports both. The batch mode is optimized for high-throughput processing of pre-recorded files (interviews, meetings), while the real-time API handles live audio streams with low latency, making it suitable for captioning or live transcription.

* **Speaker Diarization:** The model automatically identifies “who spoke when.” This is crucial for meeting minutes and interview analysis, removing the manual effort of separating speakers.

* **Multilingual Support:** Voxtral is natively multilingual, supporting over 30 languages including English, Spanish, French, German, and Hindi. It is designed to handle code-switching (mixing languages within a single sentence), an area where many legacy ASR systems struggle.

* **Word-Level Timestamps:** Every transcribed word comes with precise timing, essential for subtitling, video editing workflows, and synchronizing text with media.

* **Audio Intelligence:** Beyond transcription, the model can extract key information, summaries, and action items directly from the audio, leveraging Mistral’s strong NLP background.
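To make the value of word-level timestamps and diarization concrete, here is a minimal sketch that turns per-word results into SRT subtitle cues labeled by speaker. It assumes a hypothetical result shape (a `Word` with `text`, `start`, `end`, and `speaker` fields); this is not Voxtral's documented response schema, so adapt the field mapping to whatever the API actually returns.

```python
from dataclasses import dataclass


@dataclass
class Word:
    # Hypothetical shape of one word-level result. The field names are
    # assumptions for illustration, not Voxtral's documented schema.
    text: str
    start: float  # seconds from the start of the audio
    end: float    # seconds from the start of the audio
    speaker: str


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: list[Word], max_words: int = 7) -> str:
    """Group consecutive words from the same speaker into numbered SRT cues."""
    cues: list[list[Word]] = []
    for word in words:
        # Start a new cue on a speaker change or when the current cue is full.
        if (not cues or cues[-1][-1].speaker != word.speaker
                or len(cues[-1]) >= max_words):
            cues.append([word])
        else:
            cues[-1].append(word)
    blocks = []
    for index, cue in enumerate(cues, start=1):
        text = " ".join(w.text for w in cue)
        blocks.append(
            f"{index}\n"
            f"{srt_timestamp(cue[0].start)} --> {srt_timestamp(cue[-1].end)}\n"
            f"[{cue[0].speaker}] {text}\n"
        )
    # A blank line separates consecutive SRT cues.
    return "\n".join(blocks)


words = [
    Word("Hello", 0.00, 0.42, "SPEAKER_1"),
    Word("everyone.", 0.45, 0.98, "SPEAKER_1"),
    Word("Hi!", 1.30, 1.55, "SPEAKER_2"),
]
print(words_to_srt(words))
```

Splitting cues on speaker changes keeps each subtitle attributable to a single voice, which is usually what editors want when captions carry speaker labels.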

How It Works / Technology Behind It

Voxtral Transcribe 2 uses a transformer-based architecture, likely trained on a large and diverse corpus of audio. Its training may rely on self-supervised techniques similar to wav2vec 2.0, adapted to Mistral's own training methodology.

The pairing of batch and real-time modes reflects a unified architecture that shares weights between the two tasks, so accuracy should stay consistent regardless of input format. For real-time processing, the model uses a sliding window attention mechanism to process audio chunks as they arrive, minimizing the "time to first token." For diarization, it employs an embedding-based clustering method that analyzes voice characteristics alongside semantic context to attribute speech segments accurately.
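Sliding window attention is what keeps streaming cheap: each new audio frame attends only to a fixed number of recent frames, so the per-frame cost does not grow with the length of the stream. The sketch below is a generic, textbook version of causal sliding-window attention in plain Python, operating on toy vectors; it illustrates the idea the launch describes and is not Mistral's implementation, whose window size and details are not public here.

```python
import math


def sliding_window_attention(q, k, v, window):
    """Causal attention where query i only sees keys/values at positions (i - window, i]."""
    d = len(q[0])
    outputs = []
    for i in range(len(q)):
        # Only the most recent `window` positions (including i itself) are visible.
        visible = range(max(0, i - window + 1), i + 1)
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in visible]
        # Subtract the max score before exponentiating for numerical stability.
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        # Output is the softmax-weighted average of the visible value vectors.
        outputs.append([
            sum(w * v[j][c] for w, j in zip(weights, visible)) / total
            for c in range(len(v[0]))
        ])
    return outputs


# Toy "audio frame" embeddings; in a real model these come from an audio encoder.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
out = sliding_window_attention(frames, frames, values, window=2)
print(out)
```

Because frame 3 only looks at frames 2 and 3 when `window=2`, changing frame 0 cannot affect its output. That locality is what lets a streaming system emit results as audio arrives instead of waiting for the whole file.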

Use Cases and Practical Applications

The versatility of Voxtral Transcribe 2 makes it applicable across various sectors:

* **Customer Support Analytics:** Call centers can use the batch API to transcribe thousands of hours of recorded calls. The diarization feature separates agent and customer speech, while the NLP layer can automatically tag sentiment and identify compliance issues.

* **Media and Content Creation:** Podcasters and video producers can utilize the real-time API for live captioning during streams or the batch mode to generate subtitles for pre-recorded content. The word-level timestamps allow for precise synchronization.

* **Legal and Compliance:** Law firms can process deposition recordings. The high accuracy in multilingual contexts is vital for international litigation where transcripts must be precise and speaker attribution is legally significant.

* **Research and Academia:** Researchers conducting interviews in multiple languages can rely on Voxtral to handle transcription without needing separate tools for different languages or speaker separation.
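For the customer support case, one common metric built on diarized output is talk time per speaker (for example, how much of a call the agent spends talking versus the customer). The sketch below assumes a hypothetical `Segment` shape with a speaker label and start/end times, and assumes the mapping from raw speaker labels to "agent" and "customer" has already been done; neither detail comes from Voxtral's API documentation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical diarized segment; field names are illustrative assumptions.
    speaker: str
    start: float  # seconds
    end: float    # seconds


def talk_time(segments):
    """Total seconds of speech attributed to each speaker label."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += max(0.0, seg.end - seg.start)
    return dict(totals)


def talk_share(segments):
    """Fraction of total speech time per speaker (empty dict if there is no speech)."""
    totals = talk_time(segments)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {speaker: t / grand_total for speaker, t in totals.items()}


call = [
    Segment("agent", 0.0, 30.0),
    Segment("customer", 30.0, 75.0),
    Segment("agent", 75.0, 90.0),
]
print(talk_share(call))  # {'agent': 0.5, 'customer': 0.5}
```

If the diarizer reports overlapping speech, summed talk time can exceed the call's wall-clock duration; shares are still computed relative to total attributed speech.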

Pricing and Plans

Mistral AI uses usage-based pricing, which is standard for the industry, and positions Voxtral at competitive rates.

* **Input Pricing:** Priced per minute of audio (or per 1,000 tokens, depending on the specific API implementation). Estimates suggest it is positioned slightly below premium competitors like GPT-4o Audio or Google’s Speech-to-Text for high-volume tiers.

* **Real-Time vs. Batch:** Batch processing is generally more cost-effective due to higher throughput efficiency. Real-time streaming incurs a premium for the low-latency infrastructure.

* **Free Tier:** Mistral typically offers a generous free tier for developers to test the API (e.g., the first 10-30 minutes free).

* **Enterprise:** Custom pricing is available for on-premise deployment or dedicated GPU clusters, ensuring data privacy for sensitive industries.
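Since billing scales with audio volume, a quick back-of-the-envelope estimate helps when choosing between batch and real-time modes. The rates below are placeholders invented for illustration and are NOT Mistral's published prices; substitute the current figures from the Mistral AI Platform. The sketch uses `Decimal` so that per-minute rates multiply without floating-point rounding noise.

```python
from decimal import Decimal


def estimate_cost(audio_minutes: Decimal, rate_per_minute: Decimal,
                  free_minutes: Decimal = Decimal("0")) -> Decimal:
    """Cost of transcribing `audio_minutes`, after subtracting any free allowance."""
    billable = max(Decimal("0"), audio_minutes - free_minutes)
    return billable * rate_per_minute


# Placeholder rates for illustration only; NOT Mistral's published prices.
BATCH_RATE = Decimal("0.003")     # hypothetical USD per audio minute
REALTIME_RATE = Decimal("0.006")  # hypothetical USD per audio minute

monthly_minutes = Decimal("10000") * 60  # e.g., 10,000 hours of recorded calls
batch = estimate_cost(monthly_minutes, BATCH_RATE)
realtime = estimate_cost(monthly_minutes, REALTIME_RATE)
print(batch, realtime)  # 1800.000 3600.000
```

With any real price list, the same arithmetic shows why high-volume archives usually belong in batch mode and why real-time streaming is worth its premium only where latency matters.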

*For the most current pricing, users should visit the [Mistral AI Platform](https://mistral.ai/).*

Pros and Cons / Who Should Use It

**Pros:**

* **Unified Architecture:** Developers do not need separate APIs for streaming and batch processing, simplifying codebases.

* **Strong Multilingual Performance:** Excels in non-English languages compared to many open-source alternatives.

* **Integration with Mistral Ecosystem:** Connects with Mistral's other models and products (e.g., Le Chat) for end-to-end audio reasoning workflows.

**Cons:**

* **Latency:** While low, real-time latency may still be slightly higher than highly optimized, specialized ASR providers like Deepgram or AssemblyAI in edge-case scenarios.

* **Cloud Dependency:** As a cloud API, it requires an internet connection, which may not suit air-gapped environments (although on-premise options exist).

* **Learning Curve:** Users unfamiliar with Mistral’s API structure may need time to adapt compared to more beginner-friendly platforms like OpenAI.

**Who Should Use It?**

Voxtral Transcribe 2 is ideal for **mid-to-large scale enterprises** and **AI developers** building products that require robust audio processing. It is particularly well-suited for teams already invested in the Mistral ecosystem who want to add voice capabilities without switching vendors. It is less ideal for hobbyists looking for a simple, drag-and-drop desktop app.

Takeaways

* **Unified Workflow:** Voxtral Transcribe 2 combines batch and real-time ASR into a single model, reducing integration complexity for developers.

* **Multilingual Edge:** The model offers superior handling of mixed-language conversations and supports over 30 languages, making it a strong choice for global applications.

* **Speaker Intelligence:** Built-in diarization and word-level timestamps provide actionable data for analytics, compliance, and media production.

* **Competitive Pricing:** Positioned as a cost-effective alternative to premium providers like GPT-4o Audio, especially for high-volume batch processing.

* **Best Fit:** Ideal for enterprises and developers needing scalable, API-driven audio intelligence rather than standalone desktop software.

FAQ

Is Voxtral Transcribe 2 available for free?

Mistral AI offers a free tier for developers to test the API, typically with a limited number of free minutes or tokens. For production-scale usage, you will need to subscribe to a paid plan based on audio minutes or tokens processed.

How does Voxtral compare to OpenAI’s Whisper or GPT-4o Audio?

Voxtral competes directly with Whisper and GPT-4o. While Whisper is open-source and highly popular, Voxtral offers a managed API experience with better streaming support. Compared to GPT-4o, Voxtral is often more cost-effective for pure transcription tasks, though GPT-4o offers deeper multimodal reasoning if you need to analyze audio content beyond just text.

Can I use Voxtral for real-time transcription in a live app?

Yes, Voxtral Transcribe 2 supports real-time ASR. It is designed to handle streaming audio with low latency, making it suitable for live captioning, meeting assistants, and voice command interfaces.

What languages does Voxtral Transcribe 2 support?

The model supports over 30 languages, including English, Spanish, French, German, Italian, Portuguese, and various Indian languages. It is designed to handle code-switching, where speakers mix two languages in the same sentence.

Does Mistral AI provide support for on-premise deployment?

Yes, Mistral AI offers enterprise plans that include options for private cloud or on-premise deployment. This is essential for industries with strict data privacy regulations, such as healthcare or finance.

What is the accuracy of the diarization feature?

Voxtral's diarization uses embedding-based techniques to distinguish between speakers. Accuracy, however, varies with audio quality, background noise, and the number of speakers. It performs best on clear, studio-quality recordings but remains robust in standard meeting environments.
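To see why the number of speakers and audio quality matter, it helps to look at how embedding-based clustering assigns segments to speakers. The sketch below uses a deliberately simple threshold ("leader") clustering over toy voice embeddings, swapped in for illustration: production diarizers often use agglomerative or spectral clustering, and Voxtral's actual method (which the launch says also uses semantic context) is not reproduced here. The embeddings are hypothetical and assumed to be non-zero vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cluster_speakers(embeddings, threshold=0.8):
    """Assign each segment embedding to the most similar speaker, or open a new speaker."""
    # Each centroid is a running sum of its members; cosine similarity ignores
    # vector length, so the sum points in the same direction as the mean.
    centroids = []
    labels = []
    for emb in embeddings:
        best, best_sim = None, -1.0
        for idx, centroid in enumerate(centroids):
            sim = cosine_similarity(emb, centroid)
            if sim > best_sim:
                best, best_sim = idx, sim
        if best is not None and best_sim >= threshold:
            centroids[best] = [c + e for c, e in zip(centroids[best], emb)]
            labels.append(best)
        else:
            centroids.append(list(emb))
            labels.append(len(centroids) - 1)
    return labels


# Toy 2-D "voice embeddings" for five speech segments from two speakers.
segments = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [1.0, 0.0], [0.2, 0.9]]
print(cluster_speakers(segments))  # [0, 0, 1, 0, 1]
```

Noise pushes embeddings of the same voice apart and similar voices closer together, so a single similarity threshold separates speakers less cleanly as recordings get messier or crowded with speakers.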
