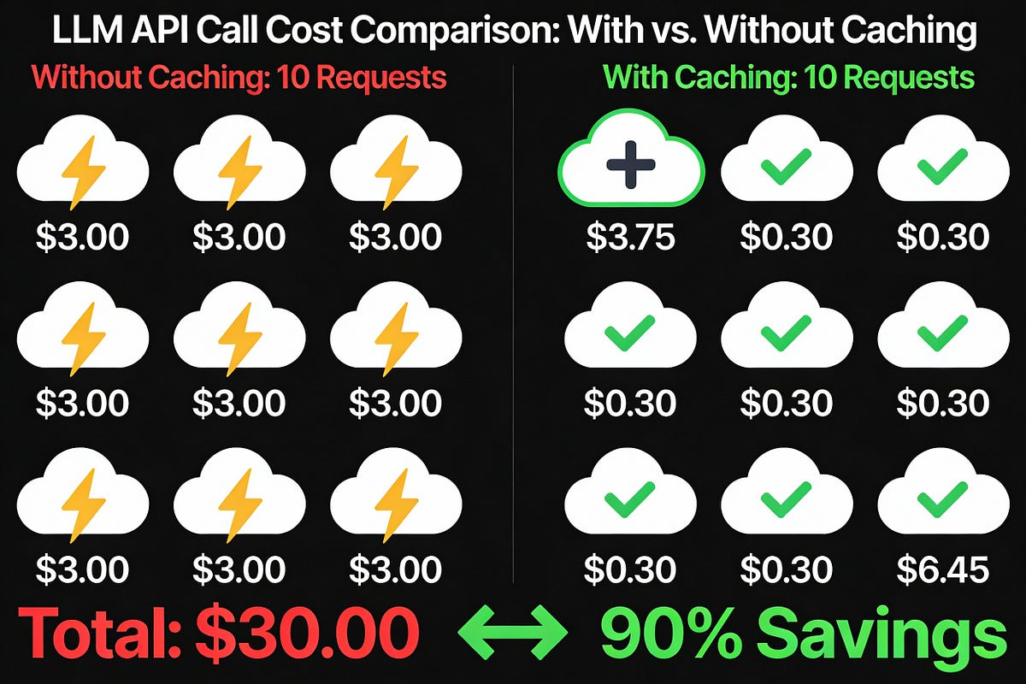

LLM API Token Caching: The 90% Cost Reduction Feature for Building AI Applications

Introduction

For developers and businesses building AI applications, managing cost is a critical concern, because LLM API usage is typically billed per token and adds up quickly at scale. LLM API Token Caching addresses this directly and can substantially reduce the cost of running AI applications.

LLM API Token Caching is designed to optimize the use of large language model (LLM) APIs, such as OpenAI’s GPT-3 and Anthropic’s Claude, by caching tokens that have already been processed and reusing them instead of paying to process them again. For organizations that rely heavily on these services and repeatedly send the same content, this can cut costs by up to 90%, which makes it worth considering for anyone building AI-powered applications.

Key Features and Capabilities

The core function of LLM API Token Caching is to cache and reuse work the LLM API has already done. When an application sends content it has sent before, such as a shared system prompt or an identical query, the cached result is reused instead of being reprocessed and billed at full price. This reduces the number of full-price API calls and, with it, the overall cost.
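The idea of serving repeated requests from a cache can be sketched as a small exact-match response cache. This is a minimal illustration under stated assumptions, not the tool’s actual implementation: `call_llm` is a hypothetical stand-in for a provider client, and this simple cache only helps when both the model and the prompt match a previous request exactly.

```python
import hashlib
from typing import Callable, Dict


class ResponseCache:
    """Minimal exact-match cache for LLM responses (illustrative sketch)."""

    def __init__(self, call_llm: Callable[[str, str], str]) -> None:
        # call_llm(model, prompt) -> completion text; a hypothetical
        # stand-in for a real provider client.
        self._call_llm = call_llm
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash model and prompt together so identical prompts sent to
        # different models never share an entry.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1  # served from cache: no new API call
            return self._store[key]
        self.misses += 1  # first occurrence: pay for the API call once
        result = self._call_llm(model, prompt)
        self._store[key] = result
        return result


if __name__ == "__main__":
    calls = []

    def fake_llm(model: str, prompt: str) -> str:
        calls.append(prompt)
        return prompt.upper()

    cache = ResponseCache(fake_llm)
    cache.complete("model-a", "hello")
    cache.complete("model-a", "hello")
    print(cache.hits, cache.misses, len(calls))  # 1 1 1
```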

LLM API Token Caching also integrates with popular LLM APIs. It supports providers including OpenAI, Anthropic, and Hugging Face, so developers can add it to their existing AI workflows.
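One way a single caching layer can sit in front of several providers is a decorator that wraps any provider call and namespaces cache keys by provider and model. The sketch below assumes hypothetical functions `call_provider_a` and `call_provider_b` that return placeholder strings; it does not use any real SDK, and all names are illustrative.

```python
import functools
import hashlib
from typing import Callable, Dict


def cached(provider: str, store: Dict[str, str]) -> Callable:
    """Wrap any provider function f(model, prompt) -> str with a shared cache.

    `provider` is a label used to namespace keys, e.g. "openai" or "anthropic".
    """
    def decorator(fn: Callable[[str, str], str]) -> Callable[[str, str], str]:
        @functools.wraps(fn)
        def wrapper(model: str, prompt: str) -> str:
            raw = f"{provider}\x00{model}\x00{prompt}".encode("utf-8")
            key = hashlib.sha256(raw).hexdigest()
            if key not in store:
                # Only cache misses reach the underlying provider call.
                store[key] = fn(model, prompt)
            return store[key]
        return wrapper
    return decorator


shared_store: Dict[str, str] = {}


@cached("provider-a", shared_store)
def call_provider_a(model: str, prompt: str) -> str:
    # Hypothetical placeholder; a real version would call the provider's SDK.
    return f"A[{model}]: {prompt}"


@cached("provider-b", shared_store)
def call_provider_b(model: str, prompt: str) -> str:
    # Hypothetical placeholder for a second provider.
    return f"B[{model}]: {prompt}"
```

Namespacing keys by provider keeps a response from one vendor from being returned for an identical prompt sent to another, since different models produce different output.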

How It Works / Technology Behind It

Under the hood, LLM API Token Caching monitors the request patterns and token usage within an application to find content that is sent repeatedly. When a request repeats content that is already cached, that content is reused rather than processed again, which minimizes the need for new, full-price API calls.
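Monitoring request patterns to find reuse opportunities could look roughly like the tracker below, which counts how many tokens belong to prompts that were already sent earlier. It is a hedged sketch: `UsageMonitor` is a hypothetical name, and splitting on whitespace is only a rough stand-in for a provider’s real tokenizer.

```python
from collections import Counter
from typing import Dict


class UsageMonitor:
    """Tracks repeated prompts to estimate how much caching could save.

    Token counts use a whitespace split as a rough stand-in for a real
    tokenizer (an assumption; providers count tokens differently).
    """

    def __init__(self) -> None:
        self.seen: Counter = Counter()
        self.total_tokens = 0
        self.repeat_tokens = 0

    def record(self, prompt: str) -> None:
        tokens = len(prompt.split())
        self.total_tokens += tokens
        if self.seen[prompt] > 0:
            # This exact prompt was sent before, so its tokens were reusable.
            self.repeat_tokens += tokens
        self.seen[prompt] += 1

    def report(self) -> Dict[str, float]:
        ratio = self.repeat_tokens / self.total_tokens if self.total_tokens else 0.0
        return {
            "total_tokens": self.total_tokens,
            "repeat_tokens": self.repeat_tokens,
            "reusable_ratio": ratio,
        }
```

A high `reusable_ratio` suggests caching will pay off; a ratio near zero means most traffic is unique and little can be reused.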

The tool’s architecture is designed to handle high-volume API usage without degrading performance. It combines in-memory caching, for fast lookups, with persistent caching, so that cached entries survive application restarts and infrastructure changes.
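A combined in-memory and persistent cache can be sketched with a Python dictionary in front of a SQLite table from the standard library. This is an illustrative assumption about how the two tiers might fit together, not a description of the product’s internals; `TwoTierCache` and the table layout are hypothetical.

```python
import sqlite3
from typing import Dict, Optional


class TwoTierCache:
    """In-memory dict in front of a SQLite table (illustrative sketch).

    Reads check memory first, then disk; writes go to both, so entries
    survive a process restart as long as the same database file is used.
    """

    def __init__(self, db_path: str) -> None:
        self._memory: Dict[str, str] = {}
        self._conn = sqlite3.connect(db_path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS cache (key TEXT PRIMARY KEY, value TEXT NOT NULL)"
        )
        self._conn.commit()

    def get(self, key: str) -> Optional[str]:
        if key in self._memory:
            return self._memory[key]  # fast path: in-memory hit
        row = self._conn.execute(
            "SELECT value FROM cache WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            return None  # miss in both tiers
        self._memory[key] = row[0]  # promote the disk hit into memory
        return row[0]

    def put(self, key: str, value: str) -> None:
        self._memory[key] = value
        self._conn.execute(
            "INSERT OR REPLACE INTO cache (key, value) VALUES (?, ?)", (key, value)
        )
        self._conn.commit()  # persist so a restart keeps the entry

    def close(self) -> None:
        self._conn.close()
```

Reads that miss memory but hit disk are promoted into memory, so a restarted process warms up again as traffic arrives.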

Use Cases and Practical Applications

The benefits of LLM API Token Caching are greatest where AI-powered applications rely heavily on LLM APIs, including use cases such as:

1. **Conversational AI**: Chatbots, virtual assistants, and other conversational AI systems that leverage LLM APIs for natural language processing and generation.

2. **Content Creation**: Applications that generate text-based content, such as articles, stories, or product descriptions, using LLM APIs.

3. **Personalization and Recommendation**: Systems that utilize LLM APIs to provide personalized recommendations or insights based on user data.

4. **Code Generation**: Tools that leverage LLM APIs to assist developers in generating code, automating tasks, or providing code suggestions.

By implementing LLM API Token Caching, organizations can significantly reduce their operational costs while maintaining the high-quality AI capabilities that their applications require.

Pricing and Plans

LLM API Token Caching offers a flexible pricing model to accommodate the needs of businesses of all sizes. The tool is available as a self-hosted solution, allowing organizations to deploy and manage it within their own infrastructure, or as a cloud-based service with various subscription plans.

Pricing is based on the volume of API calls and the amount of cached data, so customers can choose the plan that fits their usage patterns and budget and pay only for the resources they use.
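When weighing a plan against expected savings, it helps to estimate how much the underlying LLM spend actually drops, since the saving depends on how much traffic is served from cache. The function below assumes, for illustration only, that cached tokens are billed at 10% of the normal price (`cached_discount=0.9`) and uses a hypothetical price per million tokens; substitute your provider’s actual rates.

```python
def estimate_cost(
    total_tokens: int,
    price_per_million: float,
    hit_ratio: float,
    cached_discount: float = 0.9,
) -> float:
    """Estimate input-token cost when part of the traffic is served from cache.

    hit_ratio: fraction of tokens served from cache (0.0 to 1.0).
    cached_discount: fraction of the normal price saved on cached tokens
    (0.9 is an illustrative assumption, not a quoted rate).
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    base = total_tokens / 1_000_000 * price_per_million
    cached_cost = base * hit_ratio * (1.0 - cached_discount)
    uncached_cost = base * (1.0 - hit_ratio)
    return cached_cost + uncached_cost


if __name__ == "__main__":
    # 50M tokens at a hypothetical $3.00 per million tokens.
    for ratio in (0.0, 0.5, 1.0):
        print(ratio, round(estimate_cost(50_000_000, 3.0, ratio), 2))
```

Under these assumptions, a 100% hit ratio brings the estimate down to 10% of the uncached cost, which is where an "up to 90%" figure comes from, while a 50% hit ratio saves only 45%.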

Pros and Cons / Who Should Use It

**Pros:**

– Significant cost savings (up to 90%) on LLM API usage

– Seamless integration with popular LLM API providers

– Scalable and efficient architecture to handle high-volume usage

– Flexible pricing model to suit businesses of all sizes

– Improved performance and reduced latency for AI-powered applications

**Cons:**

– Requires some technical expertise to set up and configure

– May not be suitable for applications with highly dynamic or unpredictable API usage patterns, where few requests repeat content that is already cached

**Who Should Use It:**

LLM API Token Caching is an ideal solution for organizations that:

– Heavily rely on LLM APIs for their AI-powered applications

– Aim to reduce operational costs while maintaining high-quality AI capabilities

– Have a consistent or predictable pattern of API usage

– Possess the technical resources to integrate and manage the tool within their infrastructure

Takeaways

– LLM API Token Caching can reduce API usage costs by up to 90% for organizations building AI applications

– The tool seamlessly integrates with popular LLM API providers, including OpenAI, Anthropic, and Hugging Face

– It caches and reuses previously processed content to minimize the need for new API calls

– LLM API Token Caching is particularly beneficial for use cases like conversational AI, content creation, personalization, and code generation

– The tool offers a flexible pricing model and is suitable for businesses of all sizes with consistent API usage patterns

FAQ

How does LLM API Token Caching work?

LLM API Token Caching monitors API usage patterns and reuses previously processed tokens when the same content is sent again. This reduces the need for repeated full-price API calls, leading to significant cost savings.

What are the key benefits of using LLM API Token Caching?

The primary benefits of LLM API Token Caching include up to 90% cost reduction on LLM API usage, seamless integration with popular providers, improved performance and reduced latency for AI-powered applications, and a flexible pricing model to suit businesses of all sizes.

Is LLM API Token Caching easy to integrate and use?

Integrating LLM API Token Caching requires some technical expertise, as it involves setting up and configuring the tool within the existing infrastructure. However, the tool is designed to be user-friendly and provides comprehensive documentation to guide users through the process.
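Much of that configuration typically comes down to how many entries the cache may hold and how long an entry stays valid before it is refreshed from the API. The sketch below is hypothetical: `CacheConfig`, `max_entries`, and `ttl_seconds` are illustrative names, not settings documented for this tool. It combines least-recently-used eviction with a per-entry time-to-live.

```python
import time
from collections import OrderedDict
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class CacheConfig:
    # Hypothetical settings; names are illustrative, not a real product's options.
    max_entries: int = 1000
    ttl_seconds: float = 3600.0


class ConfigurableCache:
    """LRU cache with per-entry expiry, driven by a CacheConfig."""

    def __init__(self, config: CacheConfig,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.config = config
        self._clock = clock
        self._data: "OrderedDict[str, Tuple[float, str]]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        item = self._data.get(key)
        if item is None:
            return None
        stored_at, value = item
        if self._clock() - stored_at > self.config.ttl_seconds:
            del self._data[key]  # expired: treat as a miss
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key: str, value: str) -> None:
        self._data[key] = (self._clock(), value)
        self._data.move_to_end(key)
        while len(self._data) > self.config.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
```

A short `ttl_seconds` suits content that changes often, at the cost of fewer cache hits; the injectable `clock` makes the expiry logic easy to test.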

How does LLM API Token Caching compare to other cost optimization solutions for LLM APIs?

LLM API Token Caching focuses on caching and token reuse, whereas other cost optimization solutions may rely on techniques such as usage monitoring or budget management. Its integration with a wide range of LLM API providers is also an advantage.

What type of support and documentation is available for LLM API Token Caching?

The LLM API Token Caching provider offers comprehensive documentation, including step-by-step guides, integration tutorials, and best practices. They also provide customer support channels for users to address any questions or issues that may arise during the implementation and use of the tool.
